Posts: 88

Days Won: 3

Everything posted by Jaybone

-

Skipping TS because no associated TS. What?

Jaybone replied to Jaybone's topic in Configuration Manager 2012

Annnnd, there it is. I don't remember ever having to mess with that before, but yeah, the boot image was not set in the properties of the copied TS. Thanks!

-

Hi, all. Weird thing... We recently updated from non-R2 SP1 CU5 --> SP2 --> R2 SP1 --> CU1 --> the driver bloat hotfix. This morning, I copied an old TS, made some tweaks to the copy, deployed it, and... nothing. It doesn't show up in the list. Thinking it might be the time bug I'd read about, I changed the deployment so that the available time was yesterday morning. Still nothing. Checking SMSTS.log, I found something I just don't understand: "Skipping Task Sequence XXX000C0 because it does not have an associated task sequence." Uhhhhhh, what? A new, scratch-built TS with all the same settings as the copied one shows up immediately and runs just fine. Old task sequences that existed before the upgrades are all still available and run just fine. Does anyone know what this error is trying to say, and where to look to resolve it so that I can actually use copied sequences?

-

WinPe reboots when establishing network connections

Jaybone replied to richard.davignon's topic in Configuration Manager 2012

"There are no task sequences available to this computer. Please ensure you have at least one task sequence advertised to this computer."

That's probably it. If you've got systems that aren't marked as approved, this'll happen. That can be the case if, say, you're not joining them to the domain as part of the task sequence, or manually after imaging. I might be off here, but I want to say that the default settings auto-approve domain-joined machines, but not any others. The solution for that particular situation is to do one of the following:

- Join them to the domain in the TS.
- Join them to the domain manually.
- Right-click the system in the console and choose Approve.
- Delete them from Config Manager so they show up as new.

I usually just delete them - even when manually approving, it can take a few minutes before that pushes all the way through and allows you to deploy.

-

It also prevents auto-detection from picking the wrong driver because it thinks it's better. Example: our Lenovo T440 driver package has a chipset driver that gets auto-picked as "better" when deploying to Dell Optiplex 7010s, except that the "better" driver causes a BSOD on the 7010 units. We can get around that with category filtering, but then we either need separate task sequences for the different models, or we have to edit the TS to enable/disable the appropriate category before deployment. Using WMI filters like this, everything just works.
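Here is a toy Python model of that failure mode. It is a sketch only and not how ConfigMgr actually scores drivers: the `rank` numbers, the "highest rank wins" rule, and the package/model names are assumptions for illustration. Auto-detection sees every enabled package, while a WMI-conditioned Apply Driver Package step only exposes the package whose model condition matches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Driver:
    package: str  # driver package the driver ships in
    device: str   # hardware class it claims, e.g. "chipset"
    rank: int     # hypothetical match score; higher looks "better"


@dataclass(frozen=True)
class DriverPackage:
    name: str
    model: str      # model the package was built for
    drivers: tuple  # tuple of Driver


def pick(candidates, device):
    """Return the highest-ranked candidate for a device, or None."""
    matches = [d for d in candidates if d.device == device]
    return max(matches, key=lambda d: d.rank, default=None)


def auto_detect(packages, device):
    # Every enabled package is fair game, whatever model it was built for.
    return pick([d for p in packages for d in p.drivers], device)


def wmi_gated(packages, machine_model, device):
    # Only packages whose model condition matches this machine are applied.
    allowed = [d for p in packages if p.model == machine_model for d in p.drivers]
    return pick(allowed, device)


if __name__ == "__main__":
    t440 = DriverPackage("Lenovo T440", "T440", (Driver("Lenovo T440", "chipset", 9),))
    d7010 = DriverPackage("Dell 7010", "Optiplex 7010", (Driver("Dell 7010", "chipset", 5),))
    packages = [t440, d7010]
    print(auto_detect(packages, "chipset").package)                 # Lenovo T440
    print(wmi_gated(packages, "Optiplex 7010", "chipset").package)  # Dell 7010
```

In this model the T440 chipset driver wins on the 7010 under auto-detection simply because it scores higher, and the model condition is what takes it out of the running.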

-

Machine not picking up all/correct drivers during deployment

Jaybone replied to krdell's topic in Configuration Manager 2012

Can you try making a new task sequence that only has that single driver package enabled, deploying that TS to the machine, and seeing if that works? We ran into something possibly similar. If we use automatic driver detection and have our Lenovo T440 driver package enabled in the TS, it'll cause Dell Optiplex 7010 units to bluescreen, since it picks a chipset or HDC (I forget which, at this point) driver from the T440 package that doesn't play nice with the Dells. I'm thinking that you may be running into something similar that's resulting in incompatible drivers getting picked. Limiting the TS to use only drivers from that specific package should rule out that possibility.

-

Kinda - he's right, as far as he goes. Lenovo does it their own way, different from everyone else. Dell, HP, and anything else I've ever run across use Model (Win32_ComputerSystem.Model) to store something meaningful for comparison - "Optiplex 7010," etc. Lenovo, however, uses Model for a specific machine-type code rather than the model name. E.g. my X1 Carbon has "3444CUU" in there, but I need to look at the Version property of Win32_ComputerSystemProduct to get "ThinkPad X1 Carbon." Same for our M9x's and every other recent Lenovo I've worked with.
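A minimal sketch of turning that into a task sequence WMI condition, assuming the manufacturer string on Lenovo machines is "LENOVO" and that other vendors put the friendly model name in `Win32_ComputerSystem.Model` as described above. The helper name `model_condition` is hypothetical, and it simply refuses names containing a single quote rather than trying to escape them.

```python
def model_condition(manufacturer: str, model_name: str) -> str:
    """Build a WQL query to use as a WMI condition on an Apply Driver Package step."""
    if "'" in model_name:
        raise ValueError("model name must not contain a single quote")
    if manufacturer.strip().upper() == "LENOVO":
        # Lenovo: Model holds the machine-type code (e.g. "3444CUU"),
        # so match on the friendly name in Win32_ComputerSystemProduct.Version.
        return ("SELECT * FROM Win32_ComputerSystemProduct "
                f"WHERE Version LIKE '%{model_name}%'")
    # Dell, HP, etc.: the friendly name is in Win32_ComputerSystem.Model.
    return ("SELECT * FROM Win32_ComputerSystem "
            f"WHERE Model LIKE '%{model_name}%'")


print(model_condition("LENOVO", "ThinkPad X1 Carbon"))
print(model_condition("Dell Inc.", "Optiplex 7010"))
```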

-

Lenovo Thinkpad 10 PXE Boot Problem...

Jaybone replied to ebanger's topic in Configuration Manager 2012

Just a guess, but I'd think that PXE booting via USB isn't going to work. I could be (ha! I'm certain of it!) underinformed, but I've never heard of any system that can do that. You'll probably need to create a bootable USB drive (with the proper USB network drivers included) to start from, and go from there.


-

Not to say that these are the only reasons, but we've seen similar behavior in our environment in a few scenarios:

1. There are a handful of updates that require a reboot before other updates can be installed. If one of these is installed during OSD and you haven't accommodated for it, you'll end up needing to do more patches later. You can either modify the TS to do multiple rounds of patching with planned restarts in between, or update the image to include more patches from the get-go.
2. Something else (e.g. a software installation that runs before patching) causes a delayed reboot that occurs before patching finishes.
3. Depending on your setup, Office updates may not get installed during the task sequence at all, so you end up with only Windows updates installed and need to do the Office patches later. If this is the case, you should be able to change a setting during the task sequence to allow Office patches to run during your Apply Updates step - there's at least one semi-recent thread about this. If I can remember long enough, I'll see if I can dig it up.
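For scenario 1, here is a toy Python model of why one pass isn't enough. It treats each update as applicable only after its prerequisites were installed and the machine restarted, which is a simplification of how Windows Update really evaluates applicability, and the KB names are made up. Each loop iteration stands in for one Install Software Updates step followed by a restart.

```python
from __future__ import annotations


def patch_rounds(prereqs: dict[str, set[str]]) -> list[list[str]]:
    """Simulate install passes separated by restarts.

    prereqs maps each (hypothetical) update to the updates that must already be
    installed, with a restart after them, before it shows up as applicable.
    Returns the updates installed in each pass.
    """
    installed: set[str] = set()
    passes: list[list[str]] = []
    while True:
        # Applicable now: not yet installed, and every prerequisite landed in an earlier pass.
        applicable = sorted(u for u, reqs in prereqs.items()
                            if u not in installed and reqs <= installed)
        if not applicable:
            break  # nothing left that this machine can take
        passes.append(applicable)
        installed.update(applicable)  # the restart between passes happens here
    return passes


updates = {
    "KB-A": set(),
    "KB-B": {"KB-A"},  # only offered after KB-A + restart
    "KB-C": set(),
    "KB-D": {"KB-B"},
}
for n, batch in enumerate(patch_rounds(updates), start=1):
    print(f"pass {n}: {batch}")
```

With a single update step, only the first pass lands during OSD in this model, and KB-B and KB-D show up as missing afterwards.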

-

Console confused about client version

Jaybone replied to Jaybone's topic in Configuration Manager 2012

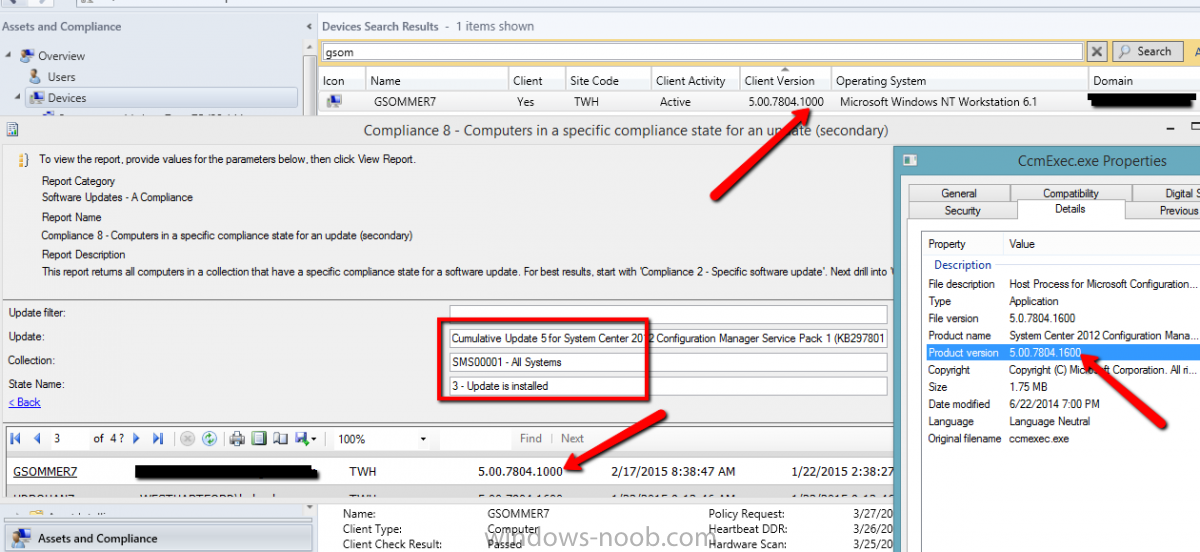

That's exactly it. Most devices show the correct build number (1600) in the "Client Version" column when the device list is viewed in the console, in that same field when the device properties are viewed, and in that field in the Compliance 8 report. But many don't, and instead show build 1000 even though they're really updated to CU5/build 1600. This is still happening months later, after many heartbeats (set to happen once a day).

-

Console confused about client version

Jaybone replied to Jaybone's topic in Configuration Manager 2012

It's normal that ~75% of my clients that successfully upgraded to CU5 will show as version 5.0.7804.1600, and the remainder will show as version 5.0.7804.1000? Sorry, but I have a hard time believing that.

-

Console confused about client version

Jaybone replied to Jaybone's topic in Configuration Manager 2012

When it works, sure, but that's not the issue here, unfortunately. These computers were updated months ago. You can see in the report window for this client that the last state received and last state change were back in February and January, respectively, and you can see in the client window that the last heartbeat was on 3/26. I manually initiated a DDC anyway, for the heck of it, on Friday 3/27. Didn't help.

-

Hi, all. SCCM 2012 SP1. A few months back, we deployed CU5 to clients via SCUP. Most clients upgraded without issue. There are a bunch, though, that the console seems confused about:

- The client version listed under Devices, and in the properties of the device, is .1000.
- The client version shown on a compliance report run against the deployment is .1000, even though the device is shown as compliant.
- The CcmExec.exe version on the device is .1600.

Anyone know how to get this sorted out?
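If you export the console's Client Version alongside the CcmExec.exe file version collected from each device (how you gather that second column is left open here, and the device names and data are hypothetical), a quick Python sketch can list the devices the console is wrong about. Comparing numerically matters: as plain strings, "5.0.7804.900" would sort after "5.0.7804.1000".

```python
from __future__ import annotations


def parse_version(version: str) -> tuple[int, ...]:
    """Turn "5.0.7804.1600" into (5, 0, 7804, 1600) for numeric comparison."""
    return tuple(int(part) for part in version.strip().split("."))


def console_out_of_date(rows) -> list[str]:
    """Return devices whose console-reported version is older than the file on disk.

    rows: iterable of (device, console_version, ccmexec_file_version) tuples.
    """
    return [device for device, console, on_disk in rows
            if parse_version(console) < parse_version(on_disk)]


inventory = [
    ("PC-001", "5.0.7804.1600", "5.0.7804.1600"),
    ("PC-002", "5.0.7804.1000", "5.0.7804.1600"),
]
print(console_out_of_date(inventory))  # ['PC-002']
```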

-

Dumb question, but have you tested to see if any of the post-SP2 updates fix the problem?

-

Office updates during build and capture

Jaybone replied to apug666's topic in Configuration Manager 2012

LOL, yeah. In the GUI, it's a simple checkbox - "give me updates for other MS products when I update Windows." Having to get at it from the back end with some arcane VBS involving a UUID and the number seven is kind of ridiculous.

-

Are they actually distributed to your DP?

-

`%~dp0` expands to "the drive and folder this script lives in" (including a trailing backslash), and it's typically used in .cmd/.bat files. E.g. you've got a script that runs an install routine with a .msi file, then copies another file, and all three of these live in the same folder. You want to make it so that if the files get moved, or if they're accessed with different methods (UNC path vs. drive letter), stuff will just keep working. Using Flash Player as an example:

    msiexec /i "%~dp0install_flash_player_16.0.0.305_active_x.msi" /qn
    copy "%~dp0mms.cfg" %systemroot%\System32\Macromed\Flash\
    if exist %systemroot%\SysWOW64\Macromed\Flash\ copy "%~dp0mms.cfg" %systemroot%\SysWOW64\Macromed\Flash\

The first line says "silently install the file named install_flash_player_16.0.0.305_active_x.msi that lives in the same folder as this script." The second line says "copy the file named mms.cfg that lives in the same folder as this script to `%systemroot%\System32\Macromed\Flash\`." The third line does the same as the second, but only if the SysWOW64 Flash folder exists, i.e. on 64-bit Windows. The `%~dp0` paths are quoted in case the folder name contains spaces.

So you can run this from any of:

- `\\server\share\Flash\16\FlashInstaller.cmd`
- `N:\Flash\16\FlashInstaller.cmd` (assuming `N:\` is mapped to `\\server\share`)
- `D:\shares\Apps\Flash\16\FlashInstaller.cmd` (if you want to run it on the server that's hosting the share)

or you can move all three files to `\\someOtherServer\someOtherShare` and run from there, without having to change the paths in the script.
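To make the trailing-backslash part concrete, here's a small Python model of how `%~dp0` resolves for the launch paths listed above. It uses `pathlib.PureWindowsPath`, so it runs on any OS; `dp0` and `sibling` are hypothetical helper names, and this only illustrates what cmd does rather than replacing the batch file.

```python
from pathlib import PureWindowsPath


def dp0(script_path: str) -> str:
    """Mimic cmd's %~dp0: the script's drive and folder, ending in a backslash."""
    folder = str(PureWindowsPath(script_path).parent)
    return folder if folder.endswith("\\") else folder + "\\"


def sibling(script_path: str, name: str) -> str:
    """What %~dp0<name> expands to inside the script."""
    return dp0(script_path) + name


for launched_as in (
    r"\\server\share\Flash\16\FlashInstaller.cmd",
    r"N:\Flash\16\FlashInstaller.cmd",
    r"D:\shares\Apps\Flash\16\FlashInstaller.cmd",
):
    print(sibling(launched_as, "mms.cfg"))
```

Because the expansion already ends in a backslash, the script writes `%~dp0mms.cfg` with no separator between the two.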

-

Are these systems that you're deploying via task sequence? I ask because we saw something similar, where clients were installed and semi-communicating (ClientLocation.log looked good, etc.), but just not working, and the console display of them was weird like this. Going to the endpoints and looking in the Configuration Manager control panel applet, there were only five actions available, where one would normally expect either two (endpoint's freshly imaged, not approved, etc.) or ten or eleven (normal working client).

We narrowed it down to some clients that may have been reimaged after our SCCM server crashed (bad RAM) and auto-recovered; a batch reimaged after a clean manual shutdown and normal startup of the server worked, at least. By FAR, though, the biggest culprit for us was the deployment TS having an action (in this case, installing SEP 12.1.5 from .msi without "SYMREBOOT=ReallySuppress" on the command line) that caused an unplanned reboot before the task sequence was able to complete, most of the time. Once that was dealt with, we haven't had this come up again.

FWIW, using psexec to uninstall the affected clients:

    psexec \\bustedClient c:\windows\ccmsetup\ccmsetup.exe /uninstall

and then reinstalling the client got them fixed right up.
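If there are a lot of affected clients, a short Python sketch can build that same psexec command per machine. The hostnames are placeholders, it assumes psexec is on the PATH and that you have admin rights on the targets, and it defaults to a dry run that only prints the commands. Reinstalling the client afterwards isn't covered.

```python
from __future__ import annotations

import subprocess

CCMSETUP = r"c:\windows\ccmsetup\ccmsetup.exe"


def uninstall_command(host: str) -> list[str]:
    """Argument list for the psexec ccmsetup /uninstall call shown above."""
    return ["psexec", "\\\\" + host, CCMSETUP, "/uninstall"]


def uninstall_all(hosts, dry_run: bool = True) -> dict:
    """Run (or just print) the uninstall for each host; returns host -> exit code."""
    results = {}
    for host in hosts:
        cmd = uninstall_command(host)
        if dry_run:
            print(" ".join(cmd))
            results[host] = None
        else:
            # Waits for psexec to finish and records its exit code.
            results[host] = subprocess.run(cmd).returncode
    return results


uninstall_all(["bustedClient01", "bustedClient02"])
```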

-

Application deployment with Bat file dependency

Jaybone replied to SCCMalfunction's topic in Configuration Manager 2012

I'm not following exactly what you mean. Does dropping the marker file fail, or does your detection logic fail to see the marker file? If it's failing to drop the marker, make sure you're trying to put it somewhere that's actually accessible to the process running the uninstall routine.
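A minimal sketch of the drop-then-detect pattern in Python, assuming the marker goes in a machine-wide folder rather than a per-user one. One way the "accessible" problem can show up (an assumption about this particular setup): if the uninstall runs in the system context, per-user locations resolve to the SYSTEM account's profile rather than the logged-on user's. The folder and file names are hypothetical, and the demo uses a temporary directory so it's safe to run.

```python
from __future__ import annotations

import tempfile
from pathlib import Path


def drop_marker(folder: Path, name: str) -> Path:
    """Create the marker (and its folder) that the uninstall routine leaves behind."""
    folder.mkdir(parents=True, exist_ok=True)
    marker = folder / name
    marker.write_text("uninstalled\n")
    return marker


def marker_present(folder: Path, name: str) -> bool:
    """Detection logic: the marker exists and is a file, not a folder."""
    return (folder / name).is_file()


def demo() -> tuple[bool, bool]:
    # Stand-in for a machine-wide path such as %ProgramData%\<YourOrg>\Markers (hypothetical).
    with tempfile.TemporaryDirectory() as tmp:
        folder = Path(tmp) / "Markers"
        before = marker_present(folder, "OldApp.removed")
        drop_marker(folder, "OldApp.removed")
        after = marker_present(folder, "OldApp.removed")
    return before, after


print(demo())  # (False, True)
```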

Office updates during build and capture

Jaybone replied to apug666's topic in Configuration Manager 2012

We dealt with this a while ago, and yeah, it's annoying. I think I actually found the link to the solution on these forums, but I can't remember. Anyway, if you grab the script here:

https://msdn.microsoft.com/en-us/library/aa826676%28VS.85%29.aspx?f=255&MSPPError=-2147217396

make a package with it, and run that package in your TS before you run the Install Software Updates step, you should be all set. And in case that link dies:

    Set ServiceManager = CreateObject("Microsoft.Update.ServiceManager")
    ServiceManager.ClientApplicationID = "My App"
    ' Add the Microsoft Update service by its service ID (GUID).
    ' 7 = asfAllowPendingRegistration (1) + asfAllowOnlineRegistration (2) + asfRegisterServiceWithAU (4)
    Set NewUpdateService = ServiceManager.AddService2("7971f918-a847-4430-9279-4a52d1efe18d",7,"")
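The "number seven" passed to `AddService2` is a bit mask. A small Python sketch decodes it, using the values of the Windows Update Agent `AddServiceFlag` enumeration (1, 2, 4). The `decode` helper is just for illustration and doesn't touch the WUA API.

```python
from __future__ import annotations

from enum import IntFlag

# Service ID passed to AddService2 in the script above (Microsoft Update).
MICROSOFT_UPDATE_SERVICE_ID = "7971f918-a847-4430-9279-4a52d1efe18d"


class AddServiceFlag(IntFlag):
    asfAllowPendingRegistration = 1
    asfAllowOnlineRegistration = 2
    asfRegisterServiceWithAU = 4


def decode(value: int) -> list[str]:
    """Names of the AddServiceFlag bits set in value."""
    return [flag.name for flag in AddServiceFlag if flag & value]


print(decode(7))
```

So 7 asks for all three behaviors at once: allow pending registration, allow online registration, and register the service with Automatic Updates.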

Hi, all. SCCM 2012 SP1 with CU5. I'm seeing weirdness when setting up device collections that attempt to include systems based on both 32-bit and 64-bit applications being installed. I'm trying to combine "Installed Applications.Display Name" (`SMS_G_System_ADD_REMOVE_PROGRAMS.DisplayName`) with "Installed Applications (64).Display Name" (`SMS_G_System_ADD_REMOVE_PROGRAMS_64.DisplayName`), but the result isn't what I'm expecting: the collection actually shrinks instead of growing, which I would think is impossible, since it's an OR.

Example using Java. With the criterion

    "Installed Applications.Display Name" like '%java 8'

I get 34 members in the collection. If I then change the criteria to

    "Installed Applications.Display Name" like '%java 8%' OR "Installed Applications (64).Display Name" like '%java 8%'

and update membership, the collection drops to 26 members. Some of the dropped ones are systems that I know for a fact have 32-bit Java 8 installed. All of the dropped systems are running a 32-bit version of Windows.

I would expect this to get *any* system, regardless of OS architecture, with 32-bit Java 8 (since these match the statement before the OR), plus any system with 64-bit Java 8 (since these match the statement after the OR). What appears to actually be happening is that once the statement checking the 64-bit app list is added, all systems running a 32-bit OS are removed from the collection, even though they do match the first criterion.

Is this intended behavior? Do I need to massage the query statement? To this point, I've just been using the auto-generated query statement that setting up via the GUI comes back with. Or is this just not possible, and I'd need to create two (32-bit via query, 64-bit via query + include other collection) or three (32, 64, both via inclusion) separate collections?
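One plausible explanation, not confirmed against the query the console generated here: the GUI-built query inner-joins both `SMS_G_System_ADD_REMOVE_PROGRAMS` and `SMS_G_System_ADD_REMOVE_PROGRAMS_64` to `SMS_R_System`. A 32-bit OS has no rows in the _64 class, so it produces no joined rows at all and drops out before the OR is ever evaluated. The Python below models the two query shapes on made-up inventory data; it illustrates join semantics and is not WQL.

```python
def inner_join_or(systems: dict, pattern: str) -> set:
    """Model: join both ARP classes to each system, then OR the two criteria.

    systems maps a resource ID to {"arp32": [...], "arp64": [...]} display names.
    """
    hits = set()
    for rid, inv in systems.items():
        # An inner join yields one row per (32-bit entry, 64-bit entry) pair,
        # so a system with no 64-bit entries yields no rows and can't match.
        for name32 in inv["arp32"]:
            for name64 in inv["arp64"]:
                if pattern in name32.lower() or pattern in name64.lower():
                    hits.add(rid)
    return hits


def subselect_or(systems: dict, pattern: str) -> set:
    """Model: 'ResourceId in (32-bit subselect) or ResourceId in (64-bit subselect)'."""
    return {rid for rid, inv in systems.items()
            if any(pattern in n.lower() for n in inv["arp32"])
            or any(pattern in n.lower() for n in inv["arp64"])}


inventory = {
    "WIN7-X86": {"arp32": ["Java 8 Update 45"], "arp64": []},
    "WIN7-X64-A": {"arp32": ["Java 8 Update 45"], "arp64": ["7-Zip (x64)"]},
    "WIN7-X64-B": {"arp32": ["Adobe Reader"], "arp64": ["Java 8 Update 45 (64-bit)"]},
}
print(sorted(inner_join_or(inventory, "java 8")))  # WIN7-X86 is missing
print(sorted(subselect_or(inventory, "java 8")))   # all three
```

If that is what's happening, a commonly used approach is to keep the select list the console generated and replace the joins in the where clause with subselects along the lines of `SMS_R_System.ResourceId in (select ResourceID from SMS_G_System_ADD_REMOVE_PROGRAMS where DisplayName like '%Java 8%') or SMS_R_System.ResourceId in (select ResourceID from SMS_G_System_ADD_REMOVE_PROGRAMS_64 where DisplayName like '%Java 8%')`.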


-

Hi. Ran into a weird thing. We're deploying Config Manager updates via SCUP, and a number of our clients (all of them 32-bit so far) were failing to install the SP1 CU5 patch for the CM client. The error in the event log was a 1706 saying it couldn't find a valid client.msi package.

Digging around in the registry, all of the problem clients I've seen so far had an InstallSource value in `HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Uninstall\{FD794BF1-657D-43B6-B183-603277B8D6C8}` of `\\siteServer\Client\i386`, and this share was indeed inaccessible. Clients that patched successfully (both 32- and 64-bit) had a value of `c:\windows\ccmsetup\{Product Code for 32 or 64-bit client}` there instead.

Changing the reg key on problem systems to point to the local copy of the .msi did not work, though I didn't try rebooting them, since they're all live systems. Changing the NTFS permissions on the `\\siteserver\client` share to grant Everyone Read (share permissions were already Everyone=Read) allowed these systems to patch. A system I was testing with, where I had changed the reg key to point at the local copy of the MSI, actually changed that key back to `\\siteServer\Client\i386` after patching was complete.

So what I'm wondering is:

1. Is the differing InstallSource location normal behavior?
2. Should the client share have had Everyone with Read on it already?
3. If 2. is "yes," then what's the best way to make sure permissions aren't hosed elsewhere as well? Just wait until something breaks? Reset? Other?
4. If 2. is "no," then what are the potential repercussions of granting Everyone read access? I'm thinking nothing, but...
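If you want to see how widespread the UNC `InstallSource` is before touching permissions, here's a Python sketch that groups clients by the share their value points at. It assumes you've already collected the `InstallSource` value from that Uninstall key on each client (the collection step isn't shown), and the client names, data, and the `{product-code}` folder are hypothetical placeholders.

```python
from pathlib import PureWindowsPath


def share_root(install_source: str):
    """Return the UNC server/share root of an InstallSource value, or None if local."""
    drive = PureWindowsPath(install_source).drive
    return drive if drive.startswith("\\\\") else None


def clients_by_share(sources: dict) -> dict:
    """Group client names by the UNC share their MSI source lives on."""
    groups = {}
    for client, source in sources.items():
        root = share_root(source)
        if root is not None:
            groups.setdefault(root, []).append(client)
    return groups


sources = {
    "PC-101": r"\\siteServer\Client\i386",
    "PC-102": r"c:\windows\ccmsetup\{product-code}",
}
print(clients_by_share(sources))
```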


-

Unable to find content after package install

Jaybone replied to epoch71's topic in Configuration Manager 2012

I've never used those guides, but just a thought: can you create an administrative installation point for them on the network, and set your package to use that as an alternate source?

-

Hi. CM2012 SP1. When I try to generate reports of OSD task sequence usage, it appears that the deployment names are static and don't actually reflect the current name of the TS. E.g. if I create and deploy a TS named "TEST - Win7 64-bit" and then, after testing is done, change the name to "Windows 7 64-bit, Off2013, HR apps," the reports don't see this name change. Running "History of a TS deployment on a computer" or "Deployment status of all task sequence deployments" and the reports linked from it, they all reference the old names of the task sequences instead of the current ones.

As far as I can tell, the name that the reports show is the name that the task sequence had when it was initially deployed. E.g. if I create a new TS, change the name a few times, deploy it, and change the name again, the reports show whatever name was current when I created the deployment: not the ones it had before then, or what it was changed to after it was deployed.

Aside from creating a new deployment when the TS name is changed (incidentally, deleting the old deployment removes all results from reporting), does anyone know of a way to make the system show the current name assigned to the TS, or am I stuck having to cross-check Deployment IDs?
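Until there's a better answer, the cross-check can at least be automated. Here is a minimal Python sketch, assuming you can export report rows with their deployment ID and separately build a map from deployment ID to the TS's current name. The IDs, names, and dict keys are hypothetical.

```python
def relabel(report_rows, current_names):
    """Swap the deployment-time TS name in each report row for the current one.

    report_rows: list of dicts with "deployment_id" and "ts_name" keys.
    current_names: dict mapping deployment ID -> current task sequence name.
    Rows with an unknown deployment ID keep the name the report gave them.
    """
    return [
        {**row, "ts_name": current_names.get(row["deployment_id"], row["ts_name"])}
        for row in report_rows
    ]


rows = [
    {"deployment_id": "XYZ20001", "computer": "PC-201", "ts_name": "TEST - Win7 64-bit"},
    {"deployment_id": "XYZ20002", "computer": "PC-202", "ts_name": "Old Win8 test"},
]
current = {"XYZ20001": "Windows 7 64-bit, Off2013, HR apps"}
for row in relabel(rows, current):
    print(row["computer"], row["ts_name"])
```

Building new dicts rather than editing rows in place keeps the original report data around for comparison.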
