wilbywilson

Established Members

Posts: 135

Days Won: 4

Everything posted by wilbywilson

-

You may want to try this command line: /passive /norestart /quiet, as documented here: http://www.itninja.com/software/foxit/reader-3/6-3442

As for the "failed" installation status, it's likely a problem with the Detection Method you're using. Read through this article to understand what's probably happening and how to fix it: http://myitforum.com/myitforumwp/2013/03/13/sccm-2012-application-deployment-detection-methods/

-

Join workgroup and domain later

wilbywilson replied to blind3d's topic in Configuration Manager 2012

I've never done this personally, but here is a thread that discusses possible solutions: http://serverfault.com/questions/483730/deploying-windows-via-sccm-2012-osd-wireless-

2 replies

Tagged with: tablet, windows 8.1 (and 1 more)

-

SCCM 2012 R2 SP1 Internet Based Client Management

wilbywilson replied to WoodyW's topic in Configuration Manager 2012

Woody, it's been a couple of years since I set up IBCM, but I posted a thread with some links and comments to help others: https://www.windows-noob.com/forums/topic/10630-ibcm-deployment-results/?hl=ibcm

Hopefully this helps you out. I no longer have direct access to the SCCM infrastructure that I helped configure, so I can't answer your questions specifically.

Using ConfigMgr to keep drivers up to date

wilbywilson replied to surfincow's topic in Configuration Manager 2012

While I've used SCCM and SCUP (with Shavlik integration), I've never tried to deploy driver updates in a business environment. There's just a lot that can go wrong, and users can end up with unusable machines. A few years ago, the security team told us that a certain Radeon/NVidia driver (I don't remember which model/manufacturer, but we had a couple thousand in our environment) had a security issue, and they wanted the SCCM team to mass-update it. We tried on a small test group, with very mixed results. In the end, it was decided that the chances of this exploit being used were slim, and we didn't push out video card drivers through SCCM.

If the security exploit in question is really serious, then I would suggest an SCCM package rather than SCUP. The only positive experience I've had with SCUP is integrating it with Shavlik (a paid service). Through that, we've deployed Adobe, Apple, and Java patches relatively successfully. I've had poor experiences with the Dell and Adobe-manufactured catalogs. (I think it tells you something when a third-party company is coding/testing the updates for these manufacturers. These big companies don't have a good track record with their own SCUP catalogs.)

I would suggest breaking them into different update groups, but not necessarily 1 per operating system. The way I like to do it is to put all of the "workstation" patches (Win7, Win8, Office, etc.) into 1 group, all of the "server" patches (Windows 2008, Windows 2012) into another group, and perhaps special/custom apps (IIS, SQL, Exchange, etc.) into yet another group. Once those are built and deployed to some test machines, I set up a deployment for each group. The deadlines for workstations are obviously different from those for servers. Your environment may be different, but I like to build my update groups based on the "type" of machine they target, and then set my deadlines accordingly. And yes, I do recommend consolidating these update groups at least once a year; otherwise there are just too many deployments running, and it's hard to make sense of what's what.
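A minimal Python sketch of grouping by machine "type" rather than by OS, with a separate deadline per group. The product-to-group mapping, the group names, and the 7/14/21-day deadline offsets are all hypothetical placeholders; in practice the update metadata comes from the SCCM console, not from a hard-coded table.

```python
from datetime import datetime, timedelta

# Hypothetical product -> update group mapping (adjust to your own catalog).
GROUP_BY_PRODUCT = {
    "Windows 7": "Workstations",
    "Windows 8": "Workstations",
    "Office 2013": "Workstations",
    "Windows Server 2008": "Servers",
    "Windows Server 2012": "Servers",
    "IIS": "Special Apps",
    "SQL Server": "Special Apps",
    "Exchange": "Special Apps",
}

# Hypothetical deadline offsets (days after the updates become available).
DEADLINE_OFFSET_DAYS = {"Workstations": 7, "Servers": 14, "Special Apps": 21}


def build_update_groups(updates):
    """Group (article_id, product) pairs by the type of machine they target."""
    groups = {}
    for article_id, product in updates:
        group = GROUP_BY_PRODUCT.get(product)
        if group is None:
            continue  # unknown product: leave it for manual review
        groups.setdefault(group, []).append(article_id)
    return groups


def deadline_for(group, available):
    """Each group gets its own enforcement deadline."""
    return available + timedelta(days=DEADLINE_OFFSET_DAYS[group])


if __name__ == "__main__":
    sample = [("KB1", "Windows 7"), ("KB2", "Windows Server 2012"),
              ("KB3", "SQL Server"), ("KB4", "Office 2013")]
    print(build_update_groups(sample))
    # {'Workstations': ['KB1', 'KB4'], 'Servers': ['KB2'], 'Special Apps': ['KB3']}
    print(deadline_for("Servers", datetime(2024, 1, 1)))
```

Unknown products are skipped rather than guessed at, so nothing lands in a group with the wrong deadline.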

5 replies

Tagged with: SUP, Software Updates (and 1 more)

-

Windows Updates - Advise on pre-prod / testing

wilbywilson replied to Rumpole's topic in Configuration Manager 2012

You're right, there's more than one way to skin a cat. I think the optimal solution depends on the size of your environment and how involved your (change) management team is. I used to work in a large environment, and we were not allowed to use ADRs to deploy Windows patches (we had ADRs for Endpoint definitions, but not for full-on Windows patches). You might think putting together a package each month is extra work, but having control over exactly which patches you select is worth it. If you're not careful, Microsoft will inevitably slip in some patches you probably don't need or want.

We would target the patches to a test collection for at least 5 business days. We'd run some reports on that test collection, showing that X percent of the collection received the patches, and review the HelpDesk tickets to make sure there were no common or widespread problems. If all was well, we'd then create a *new* deployment targeting the remaining machines. (Actually, 1 deployment for servers and another for workstations, the difference being the enforcement deadline.)

Splitting them into separate deployments is key: if management ever comes back to you with an unexpected problem, you'll be able to show them your test deployment statistics, along with the associated SCCM reports you already have in hand. If you just re-use the existing deployment and re-target it, your numbers/reports will be skewed. So I always created new advertisements when moving to production. Best to cover your bases before an ADR distributes something while you're on vacation and blows something up.
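The test-then-production gate described above can be sketched in Python as a simple check. The 95% threshold is an arbitrary stand-in for the post's "X percentage", and the open-ticket count is a hypothetical way of representing "no common/widespread HelpDesk problems"; the real numbers would come from your SCCM reports and ticketing system.

```python
from datetime import date, timedelta


def add_business_days(start, days):
    """Return the date that is `days` working days (Mon-Fri) after `start`."""
    current = start
    added = 0
    while added < days:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            added += 1
    return current


def ready_for_production(test_start, today, compliant, targeted,
                         min_percent=95.0, open_tickets=0, soak_days=5):
    """Decide whether to create the *new* production deployment.

    min_percent is a placeholder for "X percentage"; open_tickets counts
    widespread problems found in HelpDesk tickets during the test window.
    """
    if today < add_business_days(test_start, soak_days):
        return False  # the test collection hasn't had its 5 business days yet
    if targeted == 0:
        return False  # nothing was tested, so there is nothing to report on
    percent = 100.0 * compliant / targeted
    return percent >= min_percent and open_tickets == 0
```

Keeping the test deployment separate means the `compliant`/`targeted` figures stay tied to the test collection, which is exactly what you'd show management later.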

Yep, that's all you need to do. It's quite straightforward (compared to the rest of the OSD task sequence): https://www.vmadmin.co.uk/microsoft/64-mssystemcenter/353-sccmwmicwmiquerydrivers

-

Anyweb, I hear what you're saying, and I totally agree in principle. My first suggestion to the management staff was 1 single drive. I don't know if it's because of previously established standards, or something that's being passed down from "corporate." If I can give them some compelling reasons why they SHOULDN'T have 2 partitions in the main OSD image, I may be able to convince them to change their minds. So, I guess I have 2 questions now:

1) What specific complexities/problems does having 2 partitions (as opposed to 1) in the OSD image cause?
2) If I can't convince management to go with a single C partition, what's the best method (ideally automated through the task sequence) to assign the Windows pagefile to the D partition?

Thanks!

-

We've got a working OSD Task Sequence (integrating MDT 2013), which has a C (OSDisk) partition, as well as a D partition, per the company's requirements. Right now, OSD is putting the Windows pagefile on the D partition. But we would like for the pagefile to automatically be placed on the C drive. How can one accomplish that? Is there a step in the task sequence that needs to be customized/added? Thanks!

-

Slow Driver Download in SCCM 2012 R2

wilbywilson replied to commissar117's topic in Configuration Manager 2012

In your task sequence, are you letting the machine search through all of your driver packages? Or are you adding an explicit query for each machine model, so that it only searches inside that model's driver package?
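For the per-model approach, each driver step typically gets a WMI query condition against `Win32_ComputerSystem.Model`. The Python sketch below builds that query string and mimics the "only one package should match" idea. The model strings and package names are hypothetical; check what `Win32_ComputerSystem.Model` actually reports on your hardware before using them, and note that the substring check is only a rough stand-in for WQL's `LIKE "%...%"`.

```python
# Hypothetical model strings and driver package names, for illustration only.
DRIVER_PACKAGES = {
    "Latitude E7440": "Drivers - Latitude E7440",
    "EliteBook 840 G1": "Drivers - EliteBook 840 G1",
}


def wql_condition(model):
    """WMI query condition that is true only on machines of the given model."""
    return f'SELECT * FROM Win32_ComputerSystem WHERE Model LIKE "%{model}%"'


def pick_driver_package(reported_model):
    """Return the single matching driver package, or None if zero or several match."""
    matches = [
        package
        for model, package in DRIVER_PACKAGES.items()
        if model.lower() in reported_model.lower()  # rough stand-in for LIKE "%model%"
    ]
    return matches[0] if len(matches) == 1 else None
```

Returning `None` on multiple matches flags overlapping model strings, which would otherwise cause two driver packages to be applied.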

SCCMrookie, I think 30 minutes for a light build is pretty accurate. Mine takes closer to 60 minutes, but we have apps like Office 2013, Adobe Acrobat, etc. One of the benefits of SCCM is that you can integrate it with MDT 2013 and build a user-driven menu, so that you can select which apps to install during each build. Each tool has its pros and cons. SCCM may be a little slower than Acronis, but the customization options make up for it (in my opinion). As for the "real world" question, I think it depends on the environment, including your switch speed and hardware. If I were the IT guy, I would do my first "mass push" in the evening or on a weekend. As with all things in SCCM, test it out and see what the limits are; then you can determine whether it's something you can run during working hours without negatively affecting the environment. From what I've seen, SCCM imaging hasn't stressed my environment, though I'm not using multicasting at this point.

-

Question about Application Supersedence

wilbywilson replied to P@docIT's topic in Configuration Manager 2012

P@docIT, be very careful with the supersedence settings. There are definitely some "gotchas", where the app will deploy to machines that aren't even in the targeted collection. See here:
http://www.windows-noob.com/forums/index.php?/topic/10199-problem-with-supsersedence/?hl=%2Bapplication+%2Bsupersedence
http://www.windows-noob.com/forums/index.php?/topic/10912-potential-issue-with-superseding-applications-be-careful/?hl=%2Bapplication+%2Bsupersedence

4 replies

Tagged with: application deployment, supersedence (and 1 more)

-

It sounds like your OSD advertisement is for "Unknown Computers." So if you try to deploy Windows 7 to a machine that's already in the SCCM database, it will fail. This is by design, and in my opinion it's a good failsafe: this way, you won't wipe out a system by mistake. You need to manually delete the existing machine record from SCCM before deploying the operating system.

1 reply

Tagged with: macaddress, deploy (and 2 more)

-

Collections of the machines of all employees

wilbywilson replied to Polarman's question in Collections

What differentiates the students' machines from the employee machines? Do the names of the employee machines contain something unique? Is there a piece of software on the employee machines that does *not* exist on the student machines? I think you're going to need to find a unique difference and query for that.
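If the unique difference turns out to be a naming convention, the collection's query rule could look like the string built below. This Python sketch assumes a hypothetical "EMP-" prefix for employee machines; the column list follows the form the ConfigMgr console commonly generates for device collections, and the local filter only previews which names such a prefix would catch.

```python
# Columns in the form the ConfigMgr console commonly generates for device collections.
FIELDS = ("ResourceID", "ResourceType", "Name", "SMSUniqueIdentifier",
          "ResourceDomainORWorkgroup", "Client")


def collection_query(name_prefix):
    """Build a WQL collection query matching devices whose name starts with the prefix."""
    columns = ",".join(f"SMS_R_SYSTEM.{field}" for field in FIELDS)
    return (f"select {columns} from SMS_R_System "
            f'where SMS_R_System.Name like "{name_prefix}%"')


def employee_machines(machine_names, name_prefix):
    """Preview (case-insensitively) which machine names the prefix would catch."""
    prefix = name_prefix.upper()
    return [name for name in machine_names if name.upper().startswith(prefix)]
```

The same idea works for the software-based difference: the `where` clause would then test installed-software data instead of the name.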

These articles may help you out: http://blogs.technet.com/b/wsus/archive/2013/08/15/wsus-no-longer-issues-self-signed-certificates.aspx http://windowsdeployments.net/signing-certificate-issues-with-scup-2011-on-server-2012-r2/ (If you've got a certificate server in your environment, I would use that to generate the cert.)

-

Collection Membership Questions

wilbywilson replied to ramadori's topic in Configuration Manager 2012

No, separate deployments should show up simultaneously, even if the deadlines are different. Sounds like something might be wrong with the deployment itself. Are you positive that you're targeting the appropriate collection (with the specific machines in it)?

6 replies

Tagged with: Configuration Manager 2012 R2, Assets and Compliance (and 2 more)

-

Content is not fully distributing to 2nd DP

wilbywilson replied to firstcom's topic in Configuration Manager 2012

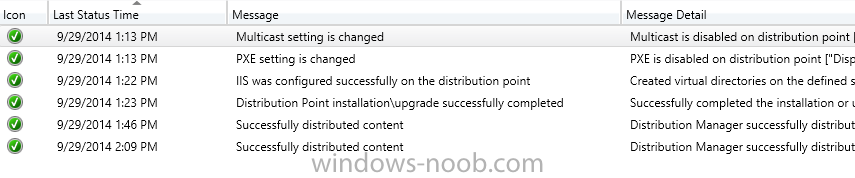

OK, so directly after that, the next line should be "successfully distributed content", because a couple of SCCM client packages are automatically distributed every time you build a distribution point. If those initial/automatic packages didn't transfer, then the packages/apps you've been sending since will also fail. When you built the DP, did you first install IIS on the Windows 2012 server? When you create a new DP, the wizard includes a checkbox for "Install and configure IIS if required by Configuration Manager." Did you check that box?

Collection Membership Questions

wilbywilson replied to ramadori's topic in Configuration Manager 2012

1. It's very common for a machine to be part of multiple collections in SCCM. As far as I understand, if 2 different deployments target the same machine, whichever deployment has the earliest deadline (assuming maintenance windows allow it) will take effect. When the second deployment's deadline comes around, the machine should already be compliant and skip those specific updates.

2. Expired or invalid updates in a Software Update Group should not affect the other updates in that group. The expired/invalid updates will stop trying to deploy, but the other updates are still active/valid. A lot of people highlight the expired/invalid updates and use "Edit Membership" to remove them from the Software Update Group. I think that's a good practice, but if you don't do it, the other updates in that group will still deploy normally.

As for your problematic Office updates, I can't explain what's happening there. What if you look at another machine in that collection? Are the Office updates showing up in Software Center (or did they already install)?

6 replies
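Both points can be illustrated with a small Python simulation for a single machine. This is a simplified model of the behavior as described in the post, not SCCM's actual evaluation engine: maintenance windows are ignored, and the deployment names and KB identifiers are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Deployment:
    name: str
    deadline: datetime
    updates: frozenset  # article IDs in the deployed software update group


def simulate(deployments, expired=frozenset()):
    """Return {update: name of the deployment that installed it} for one machine.

    Deployments are processed in deadline order (earliest first). An update that
    is already installed counts as compliant and is skipped by later deployments.
    Expired/invalid updates are skipped without blocking the rest of the group.
    """
    installed = {}
    for deployment in sorted(deployments, key=lambda d: d.deadline):
        for update in sorted(deployment.updates):
            if update in expired or update in installed:
                continue
            installed[update] = deployment.name
    return installed
```

For example, if a "Pilot" deployment (deadline March 1) contains KB1, KB2 and an expired KB3, and a "Production" deployment (deadline March 8) contains KB1 and KB4, the model installs KB1 and KB2 via Pilot, skips KB3, and installs only KB4 via Production.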

Tagged with: Configuration Manager 2012 R2, Assets and Compliance (and 2 more)

-

Content is not fully distributing to 2nd DP

wilbywilson replied to firstcom's topic in Configuration Manager 2012

It kind of sounds like your distribution point wasn't installed successfully in the first place. What operating system is this DP running? Is it fully patched, and has IIS been installed/configured? Also, go to the Monitoring section, Distribution Point Configuration Status, select the new DP, and look at the "Details" tab. What do you see there? From the first few lines in particular, you should be able to tell whether the DP installed correctly. (Check out the attached photo.)

I would use 2 things: maintenance windows and deadlines. Set your maintenance windows for weekends (or nights); that way, patches will never get installed during the daytime. If you don't want anything to install until half-term, then don't make the patches available until then. You can schedule the patches to become available at day/time X, with a deadline of day/time Y. In your case, schedule the updates to become available at the start of the half-term, with a deadline a day or so later. When the maintenance window starts, they will begin to install and reboot. (If you don't have a maintenance window, they will begin to install/reboot as soon as the deadline is reached.) If your Wake-on-LAN (WOL) isn't working, you just need to make sure the machines are turned on, and the rest should take care of itself.
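The timing rule above can be sketched as a Python function that estimates when a required deployment would start installing. It is a simplified model of the post's description only: it ignores window length, reboot handling, and whether the machine is powered on, and the dates in the example are hypothetical.

```python
from datetime import datetime


def install_time(deadline, maintenance_windows):
    """Estimate when required updates start installing on one machine.

    maintenance_windows is a list of (start, end) datetimes. With no windows,
    installation starts at the deadline. Otherwise it starts at the deadline if
    that falls inside a window, or at the start of the next window after it.
    Returns None if no window is open at or after the deadline.
    """
    if not maintenance_windows:
        return deadline
    for start, end in sorted(maintenance_windows):
        if end <= deadline:
            continue  # this window closed before the deadline was reached
        return max(start, deadline)
    return None
```

For instance, with a deadline of Tuesday 09:00 and a nightly window from 22:00 to 04:00, the sketch returns Tuesday 22:00, so nothing installs during the day.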

-

I'm not 100% clear on the Content Validation task. I understand that it can be scheduled to run on my distribution points, to compare/validate files against the main source/package. But is there any ill effect to running the Content Validation task on the Primary itself? What does it validate against (the original source folder on the Primary server)? I don't want anything to get "screwed up" if I shouldn't be validating the content on the Primary itself. So if that's a no-no, please let me know. Thanks for any advice.

-

Content is not fully distributing to 2nd DP

wilbywilson replied to firstcom's topic in Configuration Manager 2012

Well, if it's been trying for days/weeks, has there been any progress? If you go to Monitoring -> Content Status and open the details of the application/package you're trying to send, it should give you a little more detail. For instance, on a really big package, it will say something like "15% sent." If I check back in an hour, it might be at "25% sent." Are you seeing progress? How big are these applications/packages?

New Machines - Installing Past Updates

wilbywilson replied to rrasco's topic in Configuration Manager 2012

Behemyth, I think your approach is pretty common, and it's the one that I use as well. I get the same question from the security team, and it's a hard one to answer. I tend to throw the question right back at them: "What specific vulnerability/update do you want to see compliance numbers for?" I don't know how you can be expected to generate a report for every single update ever released by Microsoft. Clearly, we approve/deploy all critical security updates, but the "baseline" question is a tough one.

Content is not fully distributing to 2nd DP

wilbywilson replied to firstcom's topic in Configuration Manager 2012

Hi Firstcom, how long has this distribution point been "waiting for content"? I believe a package will attempt to re-send to a DP 100 times before it gives up. Is it possible that the connection between the Primary and the DP was down for a few days? Are you sure there are no firewall issues preventing the packages from being sent? What kind of internet link connects the Primary and the DP? These are just a few questions that may lead you in the right direction. As GiftedWon mentions, distmgr.log should also give you some clues.