
Showing results for tags 'dell'.

-

I have an OSD task sequence for Windows 11 22H2 that is being deployed to new Dell OptiPlex 7000s. The Dells are going to sleep during the "Setup Windows and Configuration Manager" step of the task sequence. I have checked the "Run as high performance power plan" box on the More Options tab, but the PC still goes to sleep.

I have also tried using a Run Command Line step to set the power plan to High performance: "PowerCfg.exe /s 8c5e7fda-e8bf-4a96-9a85-a6e23a8c635c". The command works fine in Windows PE, but the setting doesn't persist when the task sequence boots into Windows to start the setup phase. I tried adding the step right before the setup phase and right after it, and the placement doesn't seem to matter. If I don't touch the mouse or keyboard before the PC goes to sleep, the TS halts. Once I move the mouse, it continues where it left off.

If I put the command just above "Setup Windows and Configuration Manager", it runs fine, and if I press F8 and run "powercfg /getactivescheme" it shows that the system is in High performance mode. But when the system reboots into the installed OS, the power scheme has reset to Balanced. If I put the command after the "Setup Windows and Configuration Manager" step, the PC goes to sleep before the command ever runs, because that step has to finish first.

So my question is: how do I set the power scheme to High performance once the PC has booted into the installed OS, but before the "Setup Windows and Configuration Manager" portion starts to run? I saw some posts from years ago on Reddit about connected standby and using a registry setting to disable it, but I still don't know where to put the registry tweak in my TS, and it seems like the addition of the "Run as high performance power plan" option was supposed to fix this. Thanks
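The post verifies the plan by hand with `powercfg /getactivescheme` at the F8 prompt. As a minimal sketch of that same check (assuming a Python interpreter is available, which a stock WinPE or freshly applied Windows image does not provide, so treat this as an illustration or something to run on a live machine), the output can be matched on the plan GUID. The helper names below are hypothetical; only the GUID comes from the post, and the GUID is matched rather than the label because the label text is localized.

```python
import re
import subprocess
from typing import Optional

# GUID of the built-in "High performance" plan, as used with PowerCfg.exe /s in the post.
HIGH_PERFORMANCE_GUID = "8c5e7fda-e8bf-4a96-9a85-a6e23a8c635c"

# Match only the GUID: the text around it ("Power Scheme GUID:") is localized.
GUID_RE = re.compile(r"[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}")


def active_scheme_guid(powercfg_output: str) -> Optional[str]:
    """Return the first GUID in `powercfg /getactivescheme` output, lower-cased, or None."""
    match = GUID_RE.search(powercfg_output)
    return match.group(0).lower() if match else None


def is_high_performance(powercfg_output: str) -> bool:
    """True if the reported active scheme is the High performance plan."""
    return active_scheme_guid(powercfg_output) == HIGH_PERFORMANCE_GUID


def query_active_scheme() -> str:
    """Windows only: run powercfg and return its raw output."""
    result = subprocess.run(
        ["powercfg", "/getactivescheme"], capture_output=True, text=True, check=True
    )
    return result.stdout
```

Logging `is_high_performance(query_active_scheme())` before and after each reboot in the sequence would show exactly where the plan reverts to Balanced; in a real task sequence the same GUID comparison would more naturally be done in a PowerShell or batch step.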

- 2 replies

Tagged with: dell, power management (and 2 more)

-

Hi Everyone,

I'm a new Level 2 technician. I was previously a Level 1 technician, and my main role was helping users troubleshoot issues on their computers. Recently a few colleagues from Level 3 started helping me get into the SCCM environment, and I'm learning a lot from their wisdom. I found your site, started visiting it to learn a few things, and decided to register.

Thank you for visiting my page

-

Hello everyone, I need an answer to a simple question. I'm in the process of implementing MDT in my organization. We have Dell Latitude laptops that come with an OEM license, and from my understanding the OEM license is stored in the BIOS of the computers (correct me if I'm wrong).

1) Do I need to use the OEM task sequence in MDT?
2) How can I take the OEM license from the laptop's BIOS and use it on the same laptop?

I just want to use the OEM license on the same laptop it came with. I know this is possible, but I'm not sure how to do it.
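For context on question 2: on machines shipped with Windows 8 or later, the OEM key normally lives in the firmware's ACPI MSDM table (OEM Activation 3.0). Windows Setup on that same machine normally picks it up for the matching edition, and a running Windows install exposes it through WMI as `SoftwareLicensingService.OA3xOriginalProductKey`. The minimal sketch below pulls the key out of a raw MSDM table dump. It assumes the layout from Microsoft's SLIC/MSDM table specification (a 36-byte ACPI header followed by five 32-bit fields, then the key as ASCII). The function name is hypothetical, and it is not an MDT feature.

```python
import struct

ACPI_HEADER_LEN = 36  # standard ACPI System Description Table header


def msdm_product_key(table: bytes) -> str:
    """Extract the embedded product key from a raw MSDM ACPI table.

    Assumed layout (Microsoft SLIC/MSDM table spec):
      0..35  ACPI header: signature "MSDM", uint32 total length at offset 4, ...
      36     Version        (uint32)
      40     Reserved       (uint32)
      44     Data Type      (uint32)
      48     Data Reserved  (uint32)
      52     Data Length    (uint32)
      56     Data           (ASCII product key, normally 29 chars)
    """
    if table[:4] != b"MSDM":
        raise ValueError("not an MSDM table")
    (total_length,) = struct.unpack_from("<I", table, 4)
    if total_length > len(table):
        raise ValueError("table is truncated")
    (data_length,) = struct.unpack_from("<I", table, 52)
    start = ACPI_HEADER_LEN + 20  # header plus the five uint32 fields
    if start + data_length > total_length:
        raise ValueError("data length exceeds table length")
    return table[start:start + data_length].decode("ascii")
```

On a Linux live environment the table is usually readable (as root) from `/sys/firmware/acpi/tables/MSDM`, e.g. `msdm_product_key(open("/sys/firmware/acpi/tables/MSDM", "rb").read())`. On Windows, querying the WMI property above is the simpler route.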

-

I'm trying to install DCIS 4.2 on my computer, which has the System Center Configuration Manager 2012 R2 console installed. The installer runs until it gets to "Importing Custom Dell Reboot Package" and then goes right into "Rolling Back Option". In case there was an issue with the original system's install, I've also tried this on a system with a fresh install of the SCCM console, with the same result. Has anyone run across this? Do I need to upgrade DCIS on the actual management point server first? Thanks in advance. Ryan

- 1 reply

Tagged with: sccm 2012 r2, dcis (and 1 more)

-

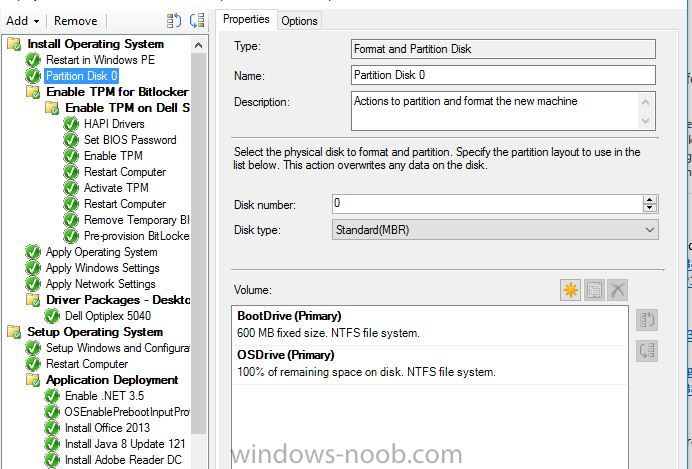

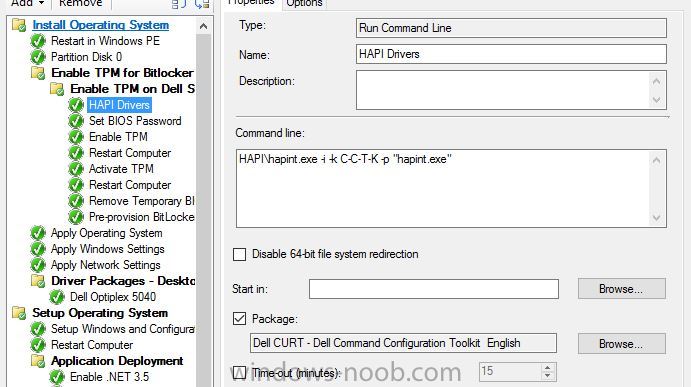

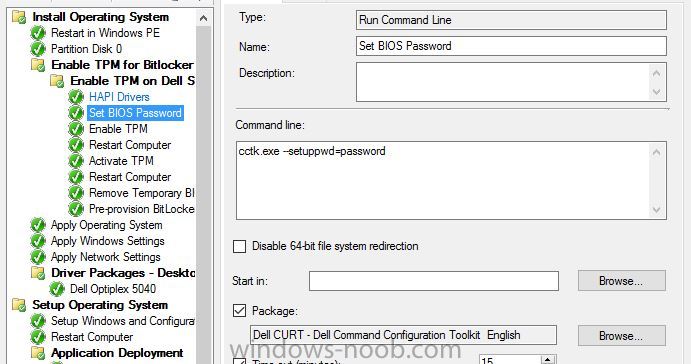

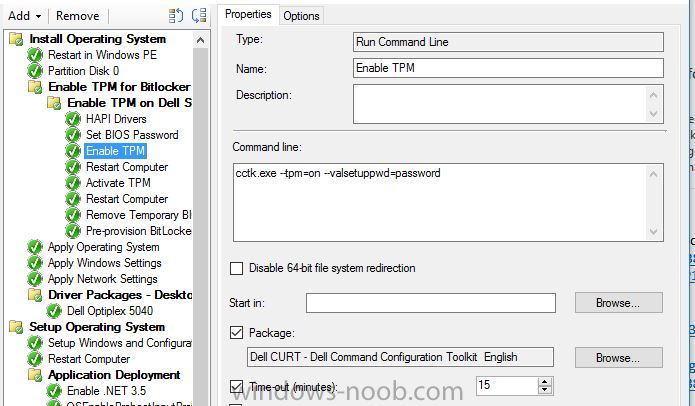

Hello, I've looked on many forums, and I am trying to find a way to enable BitLocker using a task sequence so I don't have to do every single laptop manually. I downloaded the Dell CCTK and created a package using the Dell\X86_64 folder, including all the contents inside. I then added it to the TS, and it fails. I just don't get why it isn't working; any help would be amazing. Thanks!

-

Hello, I'm having a problem enabling BitLocker on Windows 10 v1607 during the task sequence for one laptop model, the Dell Latitude E5450, except that it does work about 10% of the time. I haven't been able to narrow it down to a specific hardware problem, and different BIOS versions and drivers also give mixed results (even on the exact same laptop). Strangely, the E5450 has historically worked with Windows 10 LTSB 2015 and BitLocker. Models in our environment that work 100% of the time (with the exact same task sequence) include the Latitude E6430, E5440 and E5470.

We're using SCCM Current Branch and PXE boot for OS deployment. We do not have any integration with MDT or MBAM. On the E5450s, when it fails it does so at the default Enable BitLocker step. The Enable BitLocker step is configured for TPM only, create recovery key in Active Directory, and wait for BitLocker to complete. Also, before being built, the computers have the TPM manually cleared in the BIOS (if they were previously BitLockered), and the disks are formatted at the beginning of the build.

Here's a snippet from the smsts logs for the Enable BitLocker step on a failed build:

Command line: "OSDBitLocker.exe" /enable /wait:True /mode:TPM /pwd:AD
Initialized COM
Command line for extension .exe is "%1" %*
Set command line: "OSDBitLocker.exe" /enable /wait:True /mode:TPM /pwd:AD
Target volume not specified, using current OS volume
Current OS volume is 'C:'
Succeeded loading resource DLL 'C:\WINDOWS\CCM\1033\TSRES.DLL'
Protection is OFF
Volume is fully decrypted
Tpm is enabled
Tpm is activated
Tpm is owned
Tpm ownership is allowed
Tpm has compatible SRK
Tpm has EK pair
Initial TPM state: 63
TPM is already owned.
Creating recovery password and escrowing to Active Directory
Set FVE group policy registry keys to escrow recovery password
Set FVE group policy registry key in Windows 7
Set FVE OSV group policy registry keys to escrow recovery password
Using random recovery password
Protecting key with TPM only
uStatus == 0, HRESULT=8028005a (e:\qfe\nts\sms\framework\tscore\encryptablevolume.cpp,1304)
'ProtectKeyWithTPM' failed (2150105178)
hrProtectors, HRESULT=8028005a (e:\nts_sccm_release\sms\client\osdeployment\bitlocker\bitlocker.cpp,1252)
Failed to enable key protectors (0x8028005A)
CreateKeyProtectors( keyMode, pszStartupKeyVolume ), HRESULT=8028005a (e:\nts_sccm_release\sms\client\osdeployment\bitlocker\bitlocker.cpp,1322)
ConfigureKeyProtection( keyMode, pwdMode, pszStartupKeyVolume ), HRESULT=8028005a (e:\nts_sccm_release\sms\client\osdeployment\bitlocker\bitlocker.cpp,1517)
pBitLocker->Enable( argInfo.keyMode, argInfo.passwordMode, argInfo.sStartupKeyVolume, argInfo.bWait ), HRESULT=8028005a (e:\nts_sccm_release\sms\client\osdeployment\bitlocker\main.cpp,382)
Process completed with exit code 2150105178
Failed to run the action: Enable BitLocker. The context blob is invalid. (Error: 8028005A; Source: Windows)

On successful builds, the snippet is exactly the same up to the "Protecting key with TPM only" line. At that point, it continues with the following before moving on to the next step:

Protecting key with TPM only
Encrypting volume 'C:'
Reset FVE group policy registery key
Reset FVE group policy registry key in Windows 7
Reset FVE OSV group policy registery key

I'm mostly wondering if anyone has seen the errors "Failed to enable key protectors (0x8028005A)" or "The context blob is invalid. (Error: 8028005A; Source: Windows)", or has any idea what could cause this issue. Thanks for any help you can provide!
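The log reports the same failure twice in different notations: "exit code 2150105178" is the decimal form of HRESULT 0x8028005A. Splitting it into HRESULT fields gives severity = failure, facility 0x28 (the facility Windows uses for TPM_E_* errors) and code 0x5A, which matches the log's own text "The context blob is invalid" (TPM_E_BADCONTEXT). That places the problem in the TPM's response to `ProtectKeyWithTPM` rather than in the task sequence itself. Below is a minimal decoder sketch. The function and type names are illustrative, not from any SCCM tool.

```python
from typing import NamedTuple


class HResult(NamedTuple):
    failure: bool  # severity bit (bit 31)
    facility: int  # bits 16..26
    code: int      # low 16 bits


def decode_hresult(value: int) -> HResult:
    """Split a 32-bit HRESULT (signed or unsigned) into severity, facility and code."""
    value &= 0xFFFFFFFF  # normalise negative (signed) values to unsigned 32-bit
    return HResult(
        failure=bool(value >> 31),
        facility=(value >> 16) & 0x7FF,
        code=value & 0xFFFF,
    )


def exit_code_to_hex(exit_code: int) -> str:
    """Render a task sequence exit code the way HRESULTs appear in smsts.log."""
    return f"0x{exit_code & 0xFFFFFFFF:08X}"
```

The same arithmetic turns the exit code 2147500037 from the XPS 13 post on this page into 0x80004005, the generic "Unspecified error" (facility 0, code 0x4005).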

- 1 reply

Tagged with: Windows 10, TPM and BitLocker (and 4 more)

-

Hi, we are planning a Windows 10 deployment for the not-too-distant future, and I am working on getting a new set of task sequences set up for it, incorporating some of the niggly things we've wanted to do for a long time but haven't had the time or patience for. One of those things is configuring the computers' BIOS settings to our corporate standard. We are almost entirely Dell, with computers of varying ages, but the majority will run Dell CCTK commands, which is how we currently apply the settings after the task sequence.

I basically followed this article to set up the CCTK part of our task sequence (https://miketerrill.net/2015/08/31/automating-dell-bios-uefi-standards-for-windows-10/). Under a WinPE 10 x86 boot image, I have a CCTK exe that changes the system from our standard password to "password", so that when the rest of the commands run (which require the password on the command line) the real password doesn't show up in the log files. Another CCTK exe resets the password later.

I have now found I need an x64 boot image, so I have done the normal import etc., but the Dell CCTK exe that ran perfectly well under x86 now fails. When I try to run it from the F8 command prompt I get the message "Subsystem needed to support the image type is not present". The exe was made on an x64 machine and should be multi-platform. Has anyone else tried this and run into a similar problem, or found a solution? Thanks everyone, John
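That error text is what Windows reports when it cannot run an executable of a given image type. The x64 edition of WinPE does not include the WOW64 layer, so 32-bit executables generally will not start there. Whether the exe runs depends on the architecture recorded in the file's PE header, not on the machine it was built on. Below is a minimal sketch that reads that field. It relies on documented PE/COFF offsets: `e_lfanew` at 0x3C, the "PE\0\0" signature, then the 16-bit `Machine` value. The function names are hypothetical.

```python
import struct

# IMAGE_FILE_HEADER.Machine values from the PE/COFF specification.
MACHINE_TYPES = {
    0x014C: "x86 (32-bit)",
    0x8664: "x64 (64-bit)",
    0xAA64: "ARM64",
}


def pe_machine(data: bytes) -> str:
    """Return the target architecture recorded in a PE file's COFF header."""
    if data[:2] != b"MZ":
        raise ValueError("missing MZ (DOS) header")
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)  # e_lfanew
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("missing PE signature")
    (machine,) = struct.unpack_from("<H", data, pe_offset + 4)
    return MACHINE_TYPES.get(machine, f"unknown (0x{machine:04X})")


def pe_machine_of_file(path: str) -> str:
    """Read just the headers of an executable; 4 KB covers typical files."""
    with open(path, "rb") as f:
        return pe_machine(f.read(4096))
```

If `pe_machine_of_file` reports x86 for the CCTK exe, it will need an x64 build (or an x86 boot image) to run in WinPE.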

- 3 replies

Tagged with: SCCM, Configuration Manager (and 2 more)

-

Hello, just wondering if anyone has managed to image the Dell XPS 13 9350? We have SCCM 2012 R2 SP1 CU2, and when using my current TS with the Dell XPS 13 9350, it got to the first reboot (partitioning, apply WIM, check drivers, install client, etc.) and then failed. According to this link, that is normal for this model if you set the disk operation mode to AHCI, which I had: https://www.reddit.com/r/SCCM/comments/40t2iv/dell_xps_9350_9550_sccm_imaging_guide/

I switched to RAID On as suggested in the link and added the Intel RAID driver to my WinPE. I can boot into WinPE and partition the disk, but applying the WIM in SATA RAID On mode fails. smsts.log says the following:

Expand a string: WinPE TSManager 3/7/2016 5:01:42 PM 1520 (0x05F0)
Executing command line: OSDApplyOS.exe /image:P010034A,%OSDImageIndex% "/config:P010005F,%OSDConfigFileName%" /runfromnet:True TSManager 3/7/2016 5:01:42 PM 1520 (0x05F0)
Command line for extension .exe is "%1" %* ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
Set command line: "OSDApplyOS.exe" /image:P010034A,1 "/config:P010005F,Unattend.xml" /runfromnet:True ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
Found run from net option: 1 ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
Not a data image ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
ApplyOSRetry: ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
TSLaunchMode: PXE ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
OSDUseAlreadyDeployedImage: FALSE ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
'C:\' not a removable drive ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
Searching for next available volume: ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
Volume S:\ size is 350MB and less than 750MB ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
Volume C:\ is a valid target. ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
Found volume C:\ ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
Windows target partition is 0-2, driver letter is C:\ ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
!sSystemPart.empty(), HRESULT=80004005 (e:\qfe\nts\sms\framework\tscore\diskvolume.cpp,132) ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
System partition not set ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
Unable to locate a bootable volume. Attempting to make C:\ bootable. ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
bBootDiskDefined == true, HRESULT=80004005 (e:\qfe\nts\sms\client\osdeployment\applyos\installcommon.cpp,690) ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
Unable to find the system disk ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
MakeVolumeBootable( pszVolume ), HRESULT=80004005 (e:\qfe\nts\sms\client\osdeployment\applyos\installcommon.cpp,772) ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
Failed to make volume C:\ bootable. Please ensure that you have set an active partition on the boot disk before installing the operating system. Unspecified error (Error: 80004005; Source: Windows) ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
ConfigureBootVolume(targetVolume), HRESULT=80004005 (e:\qfe\nts\sms\client\osdeployment\applyos\applyos.cpp,499) ApplyOperatingSystem 3/7/2016 5:01:42 PM 1980 (0x07BC)
Process completed with exit code 2147500037 TSManager 3/7/2016 5:01:42 PM 1520 (0x05F0)
!--------------------------------------------------------------------------------------------! TSManager 3/7/2016 5:01:42 PM 1520 (0x05F0)
Failed to run the action: Apply Win10x64Ent 1511 + Office 2016 WIM. Unspecified error (Error: 80004005; Source: Windows) TSManager 3/7/2016 5:01:42 PM 1520 (0x05F0)

The same TS works on virtually all other Dell models. Googling around quickly shows that this model is an issue, but most people seem to have trouble just seeing the disk, and I can see it: if I press F8 in WinPE I can see the disk in diskpart, and the partitioning steps work. It is applying the WIM that fails. Thankful for any help, thanks
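The log above is pasted as CMTrace displays it: message, component, date/time and thread. In the raw file, each entry is stored roughly as `<![LOG[message]LOG]!><time="..." date="..." component="..." context="" type="..." thread="..." file="...">`, where type 3 marks an error. In a chain like this one, the first HRESULT line is usually the most telling, because the later ones mostly report the same failure propagating upward. Here the first is `!sSystemPart.empty()`, followed by "System partition not set". Below is a minimal sketch, assuming that raw layout, that lists error entries and entries carrying an HRESULT in log order. The names are illustrative.

```python
import re
from typing import Iterator, List, NamedTuple, Tuple

# Assumed raw smsts.log entry layout (CMTrace format), e.g.:
# <![LOG[Unable to find the system disk]LOG]!><time="17:01:42.000+000" date="03-07-2016"
#   component="ApplyOperatingSystem" context="" type="3" thread="1980" file="installcommon.cpp:690">
ENTRY_RE = re.compile(
    r"<!\[LOG\[(?P<message>.*?)\]LOG\]!>"
    r'<time="(?P<time>[^"]*)" date="(?P<date>[^"]*)" component="(?P<component>[^"]*)"'
    r'[^>]*?type="(?P<severity>\d)"[^>]*>',
    re.DOTALL,  # messages can span several lines
)
HRESULT_RE = re.compile(r"HRESULT=[0-9a-fA-F]{8}")


class Entry(NamedTuple):
    component: str
    severity: int  # CMTrace convention: 1 = info, 2 = warning, 3 = error
    message: str


def parse_entries(text: str) -> Iterator[Entry]:
    """Yield every log entry found in the raw smsts.log text."""
    for m in ENTRY_RE.finditer(text):
        yield Entry(m["component"], int(m["severity"]), m["message"])


def failures(text: str) -> List[Tuple[str, str]]:
    """(component, message) for entries flagged as errors or reporting an HRESULT, in log order."""
    return [
        (e.component, e.message)
        for e in parse_entries(text)
        if e.severity == 3 or HRESULT_RE.search(e.message)
    ]
```

Real smsts.log files can carry extra attributes or rotate into smsts-*.log, so treat the regex as a starting point rather than a full parser.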

-

Published: 2013-06-06 (at www.testlabs.se/blog)
Updated: -
Version: 1.0

This post will focus on having the technical prerequisites ready and in place for a successful Domino/Notes migration. Before going into any details: if you are planning a migration from Domino and want to use Dell Software's Notes Migrator for Exchange, it is important to mention that the vendor requires certified people to be used for the project.

If you would like to read the other parts: Part 1: Migrations – Overview

Migration Accounts

I recommend using three accounts: one with Domino permissions, one with Active Directory (AD) permissions and one with Exchange permissions.

Domino

The Domino account should be Manager on all .NSF files (database files), Editor on the NAB (names.nsf) and Reader on all users' archive files.

Username example: Quest Migrator/DominoDomain

This is done by following the steps below:

- Create a new migration account in People & Groups: select the directory and People.
- On the right-hand side, press People – Register.
- Fill in a proper name (I typically create an account called Quest Migrator) and press Register.
- To configure the permissions on the NAB (directory), go to Files and select the directory (names.nsf), right-click it and choose Access Control and Manage. Add the account by browsing for it, and give it User type: Person and Access: Editor.
- The final step is granting the Quest Migrator/dominodomain account Manager permissions on all NSF files that will be migrated. Go to Files and select the folder where the NSF files are located. Right-click and choose Access Control and Manage. Add the account by browsing for it, and give it User type: Person and Access: Manager.

Active Directory

For the AD account, it's recommended to be a member of "Domain Admins". However, this is not a requirement, because delegated permissions can be used.
The important aspect is that the AD account has "Full Control" over the OUs where user objects are located. The AD account also needs to be a member of "View-Only Organization Management". If you use the provisioning feature within Notes Migrator for Exchange (NME), the AD account also needs "Full Control" over the OU where the contact objects are located. This account also needs to have Remote PowerShell enabled; use the command:

Set-User "SA-NME" -RemotePowerShellEnabled $True

Username example: Domain\SA-NME

Migration User

This user is not used for logging on interactively. The important aspect with this user is that it has the correct permissions on the mailbox databases. Configure the databases so that the account has Receive-As permissions; this can be done with the command below:

Get-MailboxDatabase | Add-ADPermission -User "SA-MIG" -ExtendedRights Receive-As

Username example: Domain\SA-MIG

Office 365 account

Most permissions are set automatically by NME, but you must manually set up account impersonation. This is done with the command below:

New-ManagementRoleAssignment -Role "ApplicationImpersonation" -User SA-MIG

More information about migration performance and throttling can be found in the link provided at the end of this post.

Throttling Policies and Windows Remote Management

Another thing to keep in mind is the configuration of the throttling policies and Windows Remote Management. If you are migrating to Exchange 2010, make sure to configure the throttling policy according to the configuration below:

New-ThrottlingPolicy Migration
Set-ThrottlingPolicy Migration -RCAMaxConcurrency $null -RCAPercentTimeInAD $null -RCAPercentTimeInCAS $null -RCAPercentTimeInMailboxRPC $null
Set-Mailbox "SA-MIG" -ThrottlingPolicy Migration

Also make sure to configure Windows Remote Management with the following settings.
winrm set winrm/config/winrs '@{MaxShellsPerUser="150"}'
winrm set winrm/config/winrs '@{MaxConcurrentUsers="100"}'
winrm set winrm/config/winrs '@{MaxProcessesPerShell="150"}'
winrm set winrm/config/winrs '@{AllowRemoteShellAccess="true"}'
Set-ExecutionPolicy Unrestricted

If you are migrating to Exchange 2013, the throttling policies have changed. Create a new throttling policy and assign it to the migration mailbox "SA-MIG":

New-ThrottlingPolicy Migration -RCAMaxConcurrency Unlimited -EWSMaxConcurrency Unlimited
Set-Mailbox "SA-MIG" -ThrottlingPolicy Migration

SQL Server

Notes Migrator for Exchange uses SQL for saving user information (and much more). The Native Client needs to be installed together with SQL Server 2005 or SQL Express 2005, or newer. I prefer running at least SQL 2008 R2, and I would recommend full SQL Server over the Express version, since it gives you more flexibility, for example for creating maintenance jobs. A heads-up if you are about to run a large migration: make sure to take full backups of the NME40DB, so that you have a copy of it if anything happens and so that the logs get truncated. In smaller migration projects the SQL Express version works fine; I would still recommend taking a full backup of the database, or dumping it to a .bak file and then backing up the .bak file. Give the account "Domain\SA-NME" the DBCreator role so that it can create the NME40DB during the setup of Notes Migrator for Exchange.

Lotus Notes client

I would recommend using the latest Lotus Notes client. In my last projects I've been using version 8.5.3, Basic or Normal client. An important thing never to forget is to install Lotus Notes in single-user mode.

.NET Framework 4

Make sure to install the .NET Framework 4, since it is a prerequisite for NME. I would recommend upgrading it to the latest service pack level.
Antivirus

If antivirus is installed, make sure all Quest folders and %temp% are excluded from antivirus scans. Otherwise, it may result in slower performance and potential disruption of migrated content. Most likely, there will be a mail gateway of some kind in the environment that takes care of antispam; in those situations, antivirus and antispam are already addressed in the Domino environment. On the target side, Exchange probably has an antivirus and antispam solution installed as a second layer of protection for the Transport services. As a result, I have not encountered any problems when excluding a couple of folders from scanning for the migration.

Outlook

Outlook 2007, 2010 and 2013 are all supported. I've been using Outlook 2010 in all my projects and it has been working very well. Configure Outlook with the "SA-MIG" account, since this is the account that will insert migrated content into the Exchange mailboxes using the Receive-As permission. I've learned to create and configure an Outlook profile using the SA-MIG account; make sure to configure it not to use Cached Exchange Mode. In theory, a profile should not need to be created in advance, because NME creates temporary profiles during the migration. However, this step shouldn't hurt anything either.

User Account Control (UAC)

It's recommended to disable UAC on all migration servers. This is done in the Control Panel under User Accounts, Change User Account Control settings. Make sure to set it to "Never notify" and then restart the server.

Data Execution Prevention (DEP)

It's highly recommended to disable DEP, so make sure to do that. If you're using Windows 2008 R2 like I do, you disable DEP by running:

bcdedit /set nx AlwaysOff

Also, make sure to restart the server when this is done for it to take effect.
Local administrator

If you choose to delegate the permissions instead of using the Domain Admins group for the SA-NME account, you must add the SA-NME account to the local Administrators group.

Regional Settings

During the migration, the folder names (Inbox, Inkorgen, etc.) are created based on the regional settings of the migration console. So, for example, if you are migrating a UK/English mailbox, make sure to configure the regional settings to match; if you are migrating a Swedish mailbox, set them to the Swedish locale. With this said, I would recommend migrating users who use the same language at the same time, then changing the regional settings on the migration console and continuing with the next region.

Office 365 Prerequisites

Migrating to Office 365 is like a normal migration, except that the target is a cloud service, which can be a bit special. There are two requirements that need to be fulfilled on the migration servers before starting the migration to Office 365. Install the following (select the version that suits your operating system):

- MSOL Sign-in Assistant (32-bit or 64-bit)
- MSOL Module for Windows PowerShell (32-bit or 64-bit)

The Admin Account Pooling Utility (AAPU) is used to get better throughput. The AAPU tool provides a workaround by using a different migration account for each migration thread: instead of having one migration account with a throttling limit, you could have ten migration accounts, which would give 10 migration threads in total. You can have up to 10000 migration accounts (NME 4.7.0.82). If you are going to use the AAPU, you should add the parameter below to the NME Global Defaults or Task Parameters.
[Exchange]
O365UsageLocation=<xx>

Country codes for <xx>: http://www.iso.org/iso/country_codes/iso_3166_code_lists/country_names_and_code_elements.htm

For NME 4.7.0.82 the following text is stated in the release notes (always read them!):

Office 365 Wave 15 Throttling: NME has been updated to better address the PowerShell Runspace throttling introduced in O365 Wave 15. In order to efficiently proceed with migrations to Wave 15, the tenant admin must submit a request through Microsoft to ease the PowerShell throttling restrictions. The tenant admin must open a service request with Microsoft and reference "Bemis Article: 2835021." The Microsoft Product Group will need this information:

- tenant domain (tenant.onmicrosoft.com)
- version of Exchange (in this case, for Wave 15)
- number of mailboxes to be migrated
- number of concurrent admin accounts to be used for the migration
- number of concurrent threads to be used
- number of Runspaces to be created per minute (*)
- proposed limit (powershellMaxTenantRunspaces, powershellMaxConcurrency, etc.), and the number to which to increase the limit (*)

(*) For the last two items in this list, the tenant admin should take the total number of threads across all migration machines and add a buffer, because it is difficult to predict the timing of the Runspace initiation. It is best to assume that all potential Runspaces could be created within a minute, so the values for both items should probably both be submitted as the total number.

More information about migration performance and throttling can be found in the link provided at the end of this post.

Network Ports

| Port | In/Out | Type | Source | Target | Description |
|---|---|---|---|---|---|
| 1352 | Out | Domino | Quest NME servers | All Domino mail servers; Domino Qcalcon server | Domino/Notes client (migration) |
| 445 | Out | NetBIOS/SMB | Quest NME servers | All Domino mail servers; Domino Qcalcon server; Quest NME master server | Microsoft-DS/NetBIOS traffic for migration, for reaching SMB shares. Note: not required, but recommended. |
| 389 | Out | LDAP | Quest NME servers | Active Directory DC server(s) | LDAP |
| 3268 | Out | LDAP GC | Quest NME servers | Active Directory DC server(s) | LDAP Global Catalog |
| 1025-65535 | Out | High ports | Quest NME servers | Active Directory DC server(s); Exchange server(s) | High ports (differs depending on version) |
| 1433 | Out | Microsoft SQL | Quest NME servers | Quest NME master server | For reaching the SQL DB |
| 443 | Out | HTTPS | Quest NME servers | Office 365 | Transferring migration content |

Notes from the field

Network Monitor or Wireshark may sometimes be your best friend when troubleshooting network connectivity. Portqry is another tool that can be of great value during initial network verification. Read through the release notes and the User Guide (PDF), which is included in the NME zip file; all the information is collected in that document.

Office 365 Migration Performance and throttling information

Read the other parts

- Part 1: Migrations – Overview
- Part 3: Migrating Domino/Notes to Exchange 2013 On-premise
- Part 4: Migrating Domino/Notes to Office 365
- Part 5: Migrating Resources Mailboxes, Mail-In databases and Groups
- Part 6: Prerequisites for Coexistence between Domino and Exchange 2013/Office 365
- Part 7: Configuring Coexistence Manager for Notes with Exchange 2013 On-premise
- Part 8: Configuring Coexistence Manager for Notes with Office 365
- Part 9: Prerequisites for Quest Migration Manager
- Part 10: Migrating User Mailboxes from Exchange 2003 to Exchange 2013 using Migration Manager
- Part 11: Migrating User Mailboxes from Exchange On-premise to Office 365

Feel free to comment on the post; I hope you liked the information. If you find something that might be incorrect, or have other experiences, leave a comment so it can be updated.
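The notes above suggest Portqry for initial network verification against the port table. As a rough stand-in for the TCP rows only, the sketch below attempts a TCP connection with a timeout. The host names are hypothetical placeholders, a successful connect only shows that something is listening, and the dynamic 1025-65535 range is not something a simple check like this can meaningfully cover.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, DNS failure, ...
        return False


# Hypothetical host names; replace them with your own servers.
CHECKS = [
    ("domino01.example.local", 1352),    # Domino/Notes
    ("domino01.example.local", 445),     # SMB (recommended, not required)
    ("dc01.example.local", 389),         # LDAP
    ("dc01.example.local", 3268),        # LDAP Global Catalog
    ("nme-master.example.local", 1433),  # SQL Server on the NME master
    ("o365-endpoint.example", 443),      # HTTPS towards Office 365 (placeholder)
]


def run_checks(checks=CHECKS, timeout: float = 3.0) -> dict:
    """Map each (host, port) pair to True/False reachability."""
    return {(host, port): tcp_port_open(host, port, timeout) for host, port in checks}
```

Running `run_checks()` from each NME server and printing the failures gives a quick first pass before digging in with Portqry or a packet capture.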

-

NOTE: Cross-posted to the TechNet forums.

I have used the Dell Client Integration Pack to import warranty data into SCCM, and I imported their warranty report, which gives me information on all Dell systems in the database. How can I limit this report to return data only from a specific collection? Here is the query:

SELECT v_GS_COMPUTER_SYSTEM.Name0 AS Name,
DellWarrantyInformation.Service_Tag AS [Serial Number],
DellWarrantyInformation.Build,
DellWarrantyInformation.Region,
DellWarrantyInformation.LOB AS [Line of Business],
DellWarrantyInformation.System_Model AS [System Model],
CONVERT(varchar, DellWarrantyInformation.Ship_Date, 101) AS [Dell Ship Date],
DellWarrantyInformation.Service_Level_Code AS [Service Level Code],
DellWarrantyInformation.Service_Level_Description AS [Service Level Description],
DellWarrantyInformation.Provider,
CONVERT(varchar, DellWarrantyInformation.Start_Date, 101) AS [Warranty Start Date],
CONVERT(varchar, DellWarrantyInformation.End_Date, 101) AS [Warranty End Date],
DellWarrantyInformation.Days_Left AS [Warranty Days Left],
DellWarrantyInformation.Entitlement_Type,
CONVERT(varchar, v_GS_WORKSTATION_STATUS.LastHWScan, 101) AS [Last Hardware Scan],
v_GS_SYSTEM_CONSOLE_USAGE.TopConsoleUser0
FROM DellWarrantyInformation
LEFT OUTER JOIN v_GS_SYSTEM_ENCLOSURE ON DellWarrantyInformation.Service_Tag = v_GS_SYSTEM_ENCLOSURE.SerialNumber0
LEFT OUTER JOIN v_GS_COMPUTER_SYSTEM ON v_GS_SYSTEM_ENCLOSURE.ResourceID = v_GS_COMPUTER_SYSTEM.ResourceID
LEFT OUTER JOIN v_GS_WORKSTATION_STATUS ON v_GS_SYSTEM_ENCLOSURE.ResourceID = v_GS_WORKSTATION_STATUS.ResourceID
LEFT OUTER JOIN v_GS_SYSTEM_CONSOLE_USAGE ON v_GS_SYSTEM_ENCLOSURE.ResourceID = v_GS_SYSTEM_CONSOLE_USAGE.ResourceID
WHERE (DellWarrantyInformation.Entitlement_Type <> '')
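A common way to restrict an SCCM report to one collection is to join on the `v_FullCollectionMembership` view (which pairs `ResourceID` with `CollectionID`) and filter on the collection ID. In SQL Server Reporting Services this filter is typically driven by a report parameter such as `@CollectionID`. In the query above, that would mean adding `INNER JOIN v_FullCollectionMembership ON v_GS_SYSTEM_ENCLOSURE.ResourceID = v_FullCollectionMembership.ResourceID` and `AND v_FullCollectionMembership.CollectionID = @CollectionID`. The sketch below demonstrates only the join logic. It uses Python's built-in `sqlite3` with cut-down stand-in tables named after the views in the post, and the rows and the `LAB00010` collection ID are made-up sample data.

```python
import sqlite3

# Minimal stand-ins for the views used in the post (made-up sample data below).
SCHEMA = """
CREATE TABLE DellWarrantyInformation (Service_Tag TEXT, End_Date TEXT, Entitlement_Type TEXT);
CREATE TABLE v_GS_SYSTEM_ENCLOSURE (ResourceID INTEGER, SerialNumber0 TEXT);
CREATE TABLE v_GS_COMPUTER_SYSTEM (ResourceID INTEGER, Name0 TEXT);
CREATE TABLE v_FullCollectionMembership (ResourceID INTEGER, CollectionID TEXT);
"""

# The INNER JOIN to v_FullCollectionMembership plus the CollectionID filter
# is what restricts the warranty rows to a single collection.
QUERY = """
SELECT cs.Name0 AS Name, dwi.Service_Tag AS [Serial Number], dwi.End_Date AS [Warranty End Date]
FROM DellWarrantyInformation AS dwi
LEFT OUTER JOIN v_GS_SYSTEM_ENCLOSURE AS se ON dwi.Service_Tag = se.SerialNumber0
LEFT OUTER JOIN v_GS_COMPUTER_SYSTEM AS cs ON se.ResourceID = cs.ResourceID
INNER JOIN v_FullCollectionMembership AS fcm ON se.ResourceID = fcm.ResourceID
WHERE dwi.Entitlement_Type <> '' AND fcm.CollectionID = ?
ORDER BY cs.Name0
"""


def warranty_for_collection(conn: sqlite3.Connection, collection_id: str) -> list:
    """Run the filtered report query for one collection ID."""
    return conn.execute(QUERY, (collection_id,)).fetchall()


def demo_connection() -> sqlite3.Connection:
    """Build an in-memory database with a few sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO DellWarrantyInformation VALUES (?, ?, ?)",
                     [("ABC1234", "2016-01-31", "Extended"), ("XYZ9876", "2015-06-30", "Extended")])
    conn.executemany("INSERT INTO v_GS_SYSTEM_ENCLOSURE VALUES (?, ?)", [(1, "ABC1234"), (2, "XYZ9876")])
    conn.executemany("INSERT INTO v_GS_COMPUTER_SYSTEM VALUES (?, ?)", [(1, "PC-001"), (2, "PC-002")])
    conn.executemany("INSERT INTO v_FullCollectionMembership VALUES (?, ?)",
                     [(1, "SMS00001"), (2, "SMS00001"), (1, "LAB00010")])
    return conn
```

Using an INNER JOIN here is deliberate: warranty rows whose service tag does not map to a member of the chosen collection are dropped rather than returned with empty columns.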

-

Afternoon,

Recently I have noticed that some of our machines are not consistently joining the domain as part of the task sequence. The issue is very strange: for example, the same laptop can take multiple attempts before finally going through and completing the task sequence. The issue occurs with different laptop/desktop manufacturers and models. It also occurs with machines that have previously gone through the task sequence successfully.

Our setup has been in place for 18 months and the task sequence has not been altered. The boot image has also not been altered, and the correct network drivers are present. I have read that the network drivers in the driver package may need updating; however, as the machines will occasionally work, I am unsure whether that is the correct answer. Within the task sequence I have checked that the user account used to join the machine is correct and has appropriate permissions.

After reviewing the SMSTS logs I have noticed the following:

Found network adapter "Intel® Ethernet Connection I217-V" with IP Address 169.254.101.136. TSMBootstrap 04/06/2015 12:32:50 2920 (0x0B68)

So I think it must be failing because of the IP address. I am unsure why a 169.254 address would be issued. Can anyone explain why it picks up this address during the image build?

Towards the end of the SMSTS log I can see the following:

Retrying... TSManager 04/06/2015 12:47:36 2972 (0x0B9C)
CLibSMSMessageWinHttpTransport::Send: URL: sccm-vm-wm1.XXXX.co.uk:443 CCM_POST /ccm_system_AltAuth/request TSManager 04/06/2015 12:47:36 2972 (0x0B9C)
In SSL, but with no client cert TSManager 04/06/2015 12:47:36 2972 (0x0B9C)
Error. Received 0x80072ee7 from WinHttpSendRequest. TSManager 04/06/2015 12:47:36 2972 (0x0B9C)
unknown host (gethostbyname failed) TSManager 04/06/2015 12:47:36 2972 (0x0B9C)
hr, HRESULT=80072ee7 (e:\nts_sccm_release\sms\framework\osdmessaging\libsmsmessaging.cpp,8936) TSManager 04/06/2015 12:47:36 2972 (0x0B9C)
sending with winhttp failed; 80072ee7 TSManager 04/06/2015 12:47:36 2972 (0x0B9C)

So I googled the above, and it is effectively telling me that the machine cannot speak to the DC in order to join the domain, which makes sense as it has a 169.254 address. Hopefully someone has seen something like this before; it is starting to cripple our productivity. Please let me know if you need any further information. Thanks in advance. Stephen
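For reference, 169.254.0.0/16 is the IPv4 link-local (APIPA) range. Windows assigns itself an address from it when no DHCP server answers, so a 169.254.x.x address in smsts.log means the adapter had no DHCP lease at that moment. The later error fits that picture: 0x80072ee7 is a Win32 error wrapped in an HRESULT (facility 7, code 0x2EE7 = 12007, which WinHTTP defines as ERROR_WINHTTP_NAME_NOT_RESOLVED), matching the log's own "unknown host (gethostbyname failed)" line. Below is a minimal sketch, using only the standard `ipaddress` module, that flags APIPA addresses in log text. The function name is illustrative.

```python
import ipaddress
import re
from typing import List

# Loose dotted-quad matcher; ipaddress does the real validation.
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def apipa_addresses(log_text: str) -> List[str]:
    """Return IPv4 addresses in the text that fall in 169.254.0.0/16 (APIPA / link-local)."""
    found = []
    for candidate in IPV4_RE.findall(log_text):
        try:
            addr = ipaddress.IPv4Address(candidate)
        except ipaddress.AddressValueError:
            continue  # e.g. "999.1.1.1" matches the regex but is not a valid address
        if addr.is_link_local:
            found.append(candidate)
    return found
```

Scanning a batch of collected smsts.log files this way would show how often builds start without a lease, which is useful evidence when raising the issue with whoever owns DHCP and switch-port settings.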

-

Hey all! I'm calling out to anyone who has had issues installing drivers on an OptiPlex 7010 through SCCM. I have taken on the task of getting SCCM working within our local hospital, and I've managed to get the OptiPlex 780 and 790 to deploy correctly with no issues whatsoever. However, when I follow the exact same process for the OptiPlex 7010, the task sequence fails once it needs to access our software source and install all of the applications specified in the TS. On further inspection of the logs, it's clear that the Ethernet driver has not installed at all, which causes the Windows 7 deployment to fail. I have read that SCCM doesn't work well with Intel 825xx series network cards, although I can't confirm that. I have also read that NIC devices which require a special driver for WinPE may cause the TS to fail if the OS is Vista or newer. I'm not entirely sure where to go from here. I don't want to have to capture an image of a 7010 physically, so for now I'm ruling that option out unless there are no alternatives.

-

I have a question for you guys. Have any of you used SCCM to image the Dell 7440 Ultrabooks with 16 GB of RAM? We have two models here, an 8 GB model and a 16 GB model. The 8 GB ones image perfectly fine; the 16 GB ones fail out in great fashion. They even install Windows, minus components... I'm just wondering if anyone has run into this. I tried to find logs, but there aren't any: when the image fails, it fails with no log of anything happening.

-

I've been looking for a way to pull the warranty info of our PCs into SCCM. I found the Dell Client Integration Pack but don't know much about it. Does it work as advertised? Are there any gotchas I need to know about before installing it on our SCCM server? Any tips? Does it actually pull the warranty of every Dell device and show it in SCCM or in a report? Sorry for so many questions; I just don't know anything about it yet, but it sounds like it could save me a bunch of time each year providing replacement numbers to management.

-

Software in reference image is gone after deployment

MeMyselfAndI posted a question in Deploying Operating Systems

Hi All, I created a reference image (Windows 7 x64) a few months ago. This reference image also contains a few applications like Office 2013, Silverlight... I've been using it for a few months now and have installed it on several types of Dell clients, and everything works fine. Last week, I received a new type of laptop (Dell Latitude E6540). I added the new drivers to the task sequence (Apply Driver Package method) and started the installation. This went well: the task sequence finished without errors. I started the laptop and at first sight everything looked OK, but in Add/Remove Programs a lot of software was gone! Office, Silverlight... were not listed under Add/Remove Programs! When I browse to Program Files (x86), the folders/files of the software are there. So for some reason, this type of laptop breaks my reference image! I've been troubleshooting this issue for a week now and don't know what to do anymore. Can somebody give me some advice on this? Thanks in advance!

- 3 replies

-

Tagged with: deployment, reference image (and 2 more)

-

Hello! I have been having trouble getting the correct storage drivers for a Dell T7400. I have used the Dell WinPE driver packs and the drivers listed on the Dell website. Has anyone been able to successfully PXE deploy this model? None of the drivers I have tried so far lets WinPE see the hard drive. I also have a T7500 and a T7600 with similar hardware, and those work. Could all the different versions of the drivers I have included conflict with each other? Any help would be appreciated.
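On the question of whether the different driver versions conflict: one way to at least see what is in the driver source is to list INF files that show up more than once with different DriverVer values. Below is a minimal Python sketch, assuming the Dell WinPE packs and the website drivers have been extracted under one folder passed on the command line; the function names are hypothetical. It only reports duplicates and does not tell you which driver WinPE will actually pick.

```python
"""List INF files that appear more than once with different DriverVer values."""
import codecs
import sys
from collections import defaultdict
from pathlib import Path
from typing import Dict, List, Optional, Tuple


def read_inf_text(path: Path) -> str:
    """Decode an INF file; many driver INFs are UTF-16 with a byte-order mark."""
    raw = path.read_bytes()
    if raw.startswith((codecs.BOM_UTF16_LE, codecs.BOM_UTF16_BE)):
        return raw.decode("utf-16")
    return raw.decode("utf-8-sig", errors="replace")


def version_value(text: str, key: str) -> Optional[str]:
    """Return the value of `key` from the [Version] section, or None if absent."""
    section = None
    for line in text.splitlines():
        line = line.split(";", 1)[0].strip()  # drop INF comments
        if not line:
            continue
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip().lower()
        elif section == "version" and "=" in line:
            name, value = line.split("=", 1)
            if name.strip().lower() == key.lower():
                return value.strip().strip('"')
    return None


def find_conflicting_infs(root: Path) -> Dict[str, List[Tuple[str, Path]]]:
    """Group INF files by file name; keep names seen with 2+ DriverVer values."""
    by_name: Dict[str, List[Tuple[str, Path]]] = defaultdict(list)
    infs = sorted(p for p in root.rglob("*") if p.is_file() and p.suffix.lower() == ".inf")
    for inf in infs:
        driver_ver = version_value(read_inf_text(inf), "DriverVer") or "unknown"
        by_name[inf.name.lower()].append((driver_ver, inf))
    return {
        name: hits
        for name, hits in by_name.items()
        if len({ver for ver, _ in hits}) > 1
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    for name, hits in find_conflicting_infs(Path(sys.argv[1])).items():
        print(name)
        for ver, path in hits:
            print(f"    {ver}  {path}")
```

If the same storage INF turns up with several DriverVer values, trimming the package down to the one version meant for WinPE is an easy first test before digging further.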

-

Tagged with: dell, deployments (and 4 more)

-

Hello All, I'm trying to image a Dell Precision M6800. It PXE boots and pulls the boot WIM fine, but once it gets into the task sequence area it just reboots. I can't get it to pull an IP address at all, even though it PXE boots fine. This is the only laptop that does this. Even the previous M6700 PXE boots and images fine, and I have also PXE booted all our other Dell machines without problems. This is our first M6800, and it's not letting me image it. I can't figure out what is wrong. Is anyone else having this problem with other computers? smsts.log (attached)

-

This will be a collection of posts about migrations: the first post covers migrations in general, and the following posts dig deeper.

Published: 2013-05-09 (on www.testlabs.se/blog)
Updated: 2013-05-15
Version: 1.1
Thanks for the great input and feedback: Hakim Taoussi and Magnus Göransson

Part 1: Overview

I will try to keep this first post non-technical, since it is more common sense than anything else. In short, I want to summarize some key takeaways and recommendations to stick with, and explain each of them in more detail below:

- Planning
- Information & communication
- Pilot migrations
- End-user training
- Experience
- Minimize the coexistence time

Planning

Some of you might think: well, of course we are planning. But sometimes I hear of people who spend only 10-15% of their total project time on planning. If that's the case, I would recommend rethinking it and spending at least 50% of the time on planning, maybe even more in large projects. By planning I mean creating a detailed migration plan, which should of course include estimates of how many users can be migrated per hour and how much data can be transferred per hour. In other words, the planning phase should be used both for planning and for verifying that everything is in place and works as expected. For example, the official guide from Quest Software for migrating from Domino to Exchange calculates with 5 GB/hour per migration server under good conditions. In the real world I've seen throughput of 20 GB/hour per server. With that said, it all depends (the consultant's favorite phrase). This is one of those things that need to be tested and verified before creating a detailed migration plan, so that the estimate is a good one. Don't forget to verify that the target environment has enough capacity, servers, and storage. Other questions that need clear answers could be: How are users and mailboxes provisioned? During the migration, where should new mailboxes be created?
Is there information in the user attributes that needs to be migrated from Domino into AD? How will the migration process work? What requirements are there? In short, think through every step during planning.

Information & Communication

By information I mean informing everybody who is involved in the project in one way or another. This includes the helpdesk and support, since they are the project's closest friends when it comes to helping users and taking care of incidents. Then there are the end-users themselves. If the migration will affect users in ways they are not used to, remember to inform them a couple of weeks before they are migrated, and send a reminder a couple of days before the migration takes place. During a transition from, for example, Exchange 2007 to Exchange 2010, there won't be much impact on the users: it's mostly a data transfer plus updating a couple of attributes in the directory, so the impact is very small. In those transition projects (depending on the customer's requirements), the need for user reminders is not as great as in migration projects. But keep in mind that it's better for users to get too much information than too little. In large projects, I recommend placing the information in public places like the restroom and the lunch room. Also inform people through every channel possible: intranet, mail, letters, meetings, and so on. In short, I want to state the obvious: if the information is lacking or poor, the end-user experience will be poor, and in the end that means a failed project, at least from the users' perspective.

Pilot migrations

From the projects I've been part of, I've learned lots of things and gained experience. One of them is the value of a good pilot; I would recommend dividing the pilot into 3 parts.
Part 1 is the “Technical Pilot”, which would include the closest project members and/or only technical people who can handle issues and problems when they occur. Part 2 is “Pilot 1”, which would include at least 10 users spread throughout the organization; the more spread out they are, the more value the pilot has. Part 3 is called “Pilot 2” and starts when the “Pilot 1” phase is completed and evaluated. Some tweaking may be needed before starting this stage (if there were issues and errors). “Pilot 2” should include at least 50 people across the organization; this last pilot phase is used to solve any issues that occurred in previous stages, in order to minimize the impact when the real migration phase takes place. The numbers above are just examples, but they might be reasonable for an environment with a couple of thousand users. Before starting “Pilot 2”, the whole migration process, including how objects get provisioned, should be well documented. Ideally it would be documented as early as the “Technical Pilot”, but my experience tells me that things change, and somewhere during “Pilot 1” the processes get tested and documented.

End-user training

Training is not always needed, for instance if the migrated users keep the same Outlook client version and the impact is very low. But as we all know, things change over time with new versions: if a user goes from, for example, Outlook 2003 on Windows XP to Outlook 2013 on Windows 7, there may be good reason to give users a training session and some documents with instructions on how things work in the new version.
If users are migrated from, for example, Domino/Notes to Exchange/Outlook, I would strongly recommend holding training sessions that users can attend, and also providing instructions on how things differ between Notes and Outlook and how Outlook should be used to book a meeting, send a mail, etc. This makes sure that users get a good experience and can handle the new tools.

Minimize the coexistence time

I'm not writing this because of shortcomings in the products out there or their functions. I'm writing this point for the sake of a smoother process and easier understanding, mostly for the helpdesk and the end-users. During a coexistence period (free/busy, mail flow, directory synchronization), it can be hard to troubleshoot and isolate incidents and problems. Another good reason for minimizing the coexistence time concerns shared resources: by keeping the coexistence period short, you reduce the impact on end-users. So, to minimize the hours spent on troubleshooting and the effort everyone needs to put in, I would recommend keeping the coexistence time as short as possible without hurting the user experience or the business. In short: if things are working, keep up a good pace so the coexistence time stays short!

Experience

Last but not least, I would recommend choosing carefully which project members are selected, or which company runs this kind of project. It's very important that they fully understand what needs to be done and what impact it has on everyone involved, as well as on the business itself. If you use Quest Software, they require certified people for designing, installing and configuring their products. This is to make sure that the result is good and that everyone is satisfied with it. I'm not sure about other vendors, but I think they have something similar to this model.
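The planning section above talks about estimating how much data can be moved per hour. To illustrate why testing the real throughput matters, here is a minimal Python sketch that turns a per-server rate into a duration and a server count. The 4,000 GB total, the 4 servers and the 100-hour window are made-up inputs; the 5 and 20 GB/hour rates are the two figures quoted in the planning section; and the sketch assumes a constant rate, which real migrations rarely have.

```python
"""Back-of-the-envelope migration duration estimates."""
import math


def migration_hours(total_gb: float, gb_per_hour_per_server: float, servers: int) -> float:
    """Wall-clock hours to move total_gb at a steady rate on each server."""
    if gb_per_hour_per_server <= 0 or servers <= 0:
        raise ValueError("throughput and server count must be positive")
    return total_gb / (gb_per_hour_per_server * servers)


def servers_needed(total_gb: float, gb_per_hour_per_server: float, window_hours: float) -> int:
    """Smallest number of migration servers that fits the data into the window."""
    if gb_per_hour_per_server <= 0 or window_hours <= 0:
        raise ValueError("throughput and window must be positive")
    return math.ceil(total_gb / (gb_per_hour_per_server * window_hours))


if __name__ == "__main__":
    # Hypothetical environment: 2,000 users with an average of 2 GB of mail each.
    total = 2000 * 2  # 4,000 GB
    for rate in (5, 20):  # GB/hour/server: vendor guide vs. observed in the field
        hours = migration_hours(total, rate, servers=4)
        needed = servers_needed(total, rate, window_hours=100)
        print(f"{rate:>2} GB/h/server: {hours:.0f} h on 4 servers, "
              f"{needed} servers for a 100 h window")
```

With those inputs it reports 200 hours versus 50 hours on 4 servers, and 8 versus 2 servers for a 100-hour window: a fourfold difference in either hardware or calendar time, depending only on which throughput figure turns out to hold in your environment.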
Read more

- Part 2: Prerequisites for Domino/Notes migrations
- Part 3: Migrating Domino/Notes to Exchange 2013 On-premise
- Part 4: Migrating User Mailboxes from Domino/Notes to Office 365
- Part 5: Migrating Resources Mailboxes, Mail-In databases and Groups
- Part 6: Prerequisites for Coexistence between Domino and Exchange 2013/Office 365
- Part 7: Configuring Coexistence Manager for Notes with Exchange 2013 On-Prem
- Part 8: Configuring Coexistence Manager for Notes with Office 365
- Part 9: Prerequisites for Migration Manager
- Part 10: Migrating User Mailboxes from Exchange 2003 to Exchange 2013 using Migration Manager
- Part 11: Migrating User Mailboxes from Exchange On-Premise to Office 365

I hope these key takeaways gave you some good insight and some things to think about. I would be happy to hear your comments and feedback on this post. The plan is to post a new article every second week, so keep your eyes open!

Regards,
Jonas

-

Tagged with: Exchange, Migrations (and 3 more)

-

Hello, I have a ~8 MB bootable ISO that contains the Dell diagnostics tools for laptops. Is it possible to use this ISO to boot into the diagnostic tool over PXE using SCCM 2007?

-

I am attempting to import Dell E6510 CAB drivers into SCCM 2012, but every one of them fails except for the ST Micro driver. On completing the import wizard, it fails with the following error: "The selected file is not a valid Windows device driver." In DriverCatalog.log on the SCCM server I see the following: "This driver does not have a valid version signature. Failed to initialize driver digest. Code 0xe0000102". Is there a setting in SCCM to accept unsigned drivers during the import process, or is there something I am missing? Any help would be greatly appreciated!
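One thing worth checking: my reading (an assumption, not something the log confirms) is that "valid version signature" refers to the Signature entry in each INF file's [Version] section, which the INF documentation expects to be "$Windows NT$", "$Windows 95$" or "$Chicago$", rather than to digital signing. Below is a minimal Python sketch that scans a folder of already-extracted CAB contents and lists INF files whose [Version] Signature is missing or not one of those values; the function names are hypothetical.

```python
"""Flag INF files whose [Version] section lacks a recognized Signature entry."""
import codecs
import sys
from pathlib import Path
from typing import List, Optional, Tuple

# Signature values listed for the INF [Version] section (compared case-insensitively).
VALID_SIGNATURES = {"$windows nt$", "$chicago$", "$windows 95$"}


def read_inf_text(path: Path) -> str:
    """Decode an INF file; many driver INFs are UTF-16 with a byte-order mark."""
    raw = path.read_bytes()
    if raw.startswith((codecs.BOM_UTF16_LE, codecs.BOM_UTF16_BE)):
        return raw.decode("utf-16")
    return raw.decode("utf-8-sig", errors="replace")


def inf_signature(text: str) -> Optional[str]:
    """Return the Signature value from the [Version] section, or None if absent."""
    section = None
    for line in text.splitlines():
        line = line.split(";", 1)[0].strip()  # drop INF comments
        if not line:
            continue
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip().lower()
        elif section == "version" and "=" in line:
            key, value = line.split("=", 1)
            if key.strip().lower() == "signature":
                return value.strip().strip('"')
    return None


def suspect_infs(root: Path) -> List[Tuple[Path, Optional[str]]]:
    """List INF files under root whose signature is missing or unrecognized."""
    results = []
    infs = sorted(p for p in root.rglob("*") if p.is_file() and p.suffix.lower() == ".inf")
    for inf in infs:
        sig = inf_signature(read_inf_text(inf))
        if sig is None or sig.lower() not in VALID_SIGNATURES:
            results.append((inf, sig))
    return results


if __name__ == "__main__" and len(sys.argv) > 1:
    for path, sig in suspect_infs(Path(sys.argv[1])):
        print(f"{path}: signature={sig!r}")
```

If every INF in the extracted E6510 folder passes this check, the cause is probably elsewhere, and the script at least rules one theory out.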

-

Does anyone know if there is a way to skip the Set BIOS password step in an OSD task sequence if a password is already set? I want the task sequence to add the BIOS password if none exists and skip the step if one does. Thanks, Mike

-

Hello, I'm having a bit of trouble deploying Win7 to an OptiPlex 790. I have added the NIC driver to WinPE. It boots into WinPE, starts the TS, applies the OS, and applies the OptiPlex 790 driver package (latest CAB imported from Dell). On the next step, "Setup Windows and ConfigMgr", it reboots and then fails with error code 80007002. Pressing F8 reveals that it has no network. After it fails and restarts, I can log on to Windows, and everything seems OK except that there is no network driver. I can manually load the network driver, the same one I use in the driver package, and it installs just fine... It's only the 790 that has this problem; the 780 works just fine. Has anyone experienced anything like this?

- 4 replies

-

Tagged with: Dell, OptiPlex 790 (and 2 more)

-

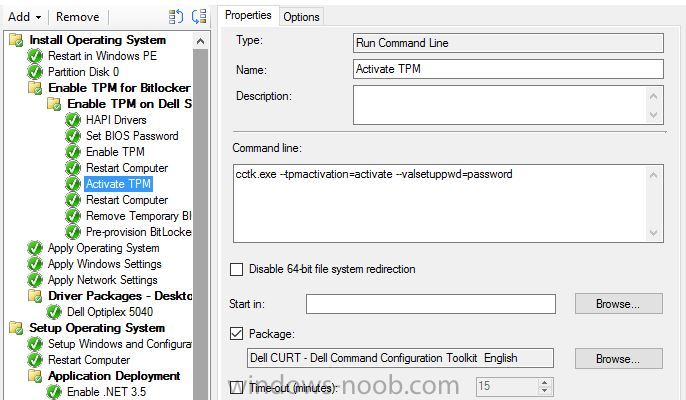

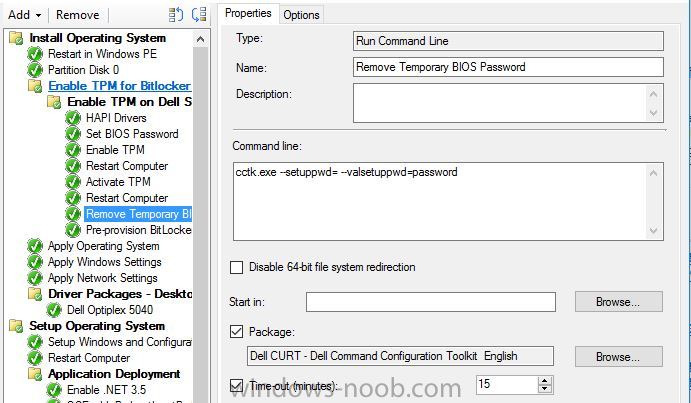

I have finally figured out the TS to set a BIOS password and enable and activate the TPM... However, the built-in 'Enable BitLocker' step fails, with no error codes and no logs. It appears to complete successfully, but it doesn't. I think I may need a script to take ownership of the TPM. All of the scripts I am running work beautifully within the OS via cmd, but through ConfigMgr, not so well. Any help/advice would be great. Thanks!

-

I am trying to enable and activate the TPM chip on the Dell machines we have. So far I have created the CCTK package, pushed it to my DP, etc., but it keeps failing at setting the BIOS password, and I have been unable to get my task sequence to complete. Everything I have read so far seems to point me in the same direction, and yet I can't get anything to cooperate.

- 3 replies

-

Tagged with: ConfigMgr 2012, Dell (and 3 more)

-

Can anyone give me a few tips on how to achieve this? I have an .exe file that I downloaded from Dell; I can pass it the -NOPAUSE parameter to force a shutdown and disable notifications. I created a package and deployed it (as required) to a collection that queries all my devices with a BIOS version lower than 'x'. The collection query works fine, as it shows the only Dell machine with a BIOS version lower than 'x'; however, although the package is sent to the computer, it doesn't actually run. Any ideas on how to get this to work? Thanks!
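Separately from why the package doesn't run: since the collection is built on a "BIOS version lower than x" test, note that if the query compares versions as plain text, it can misorder versions such as 1.9.0 and 1.10.0 (or A9 and A10 without zero padding). For double-checking membership outside ConfigMgr, here is a minimal Python sketch of a numeric-aware comparison. The function names are hypothetical, and the version formats are examples, not a claim about what your machines report.

```python
"""Compare BIOS version strings numerically instead of as plain text."""
import re
from typing import Tuple


def bios_key(version: str) -> Tuple[Tuple[int, object], ...]:
    """Split a version such as 'A12' or '1.10.0' into comparable chunks.

    Digit runs compare as integers and letter runs compare case-insensitively.
    The leading 0/1 tag keeps an int from ever being compared with a str.
    """
    chunks = re.findall(r"[0-9]+|[A-Za-z]+", version)
    return tuple((0, int(c)) if c.isdigit() else (1, c.upper()) for c in chunks)


def is_older(installed: str, target: str) -> bool:
    """True if the installed BIOS version sorts before the target version."""
    return bios_key(installed) < bios_key(target)


if __name__ == "__main__":
    for installed, target in [("1.9.0", "1.10.0"), ("A9", "A10"), ("A12", "A12")]:
        print(installed, target, "text:", installed < target,
              "numeric:", is_older(installed, target))
```

Comparing the two columns for your own BIOS versions shows quickly whether a text comparison would leave machines out of the collection.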